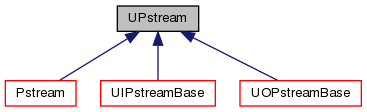

Inter-processor communications stream.

Classes

| Kind | Name | Description |
|---|---|---|
| class | `commsStruct` | Structure for communicating between processors. |
| class | `communicator` | Wrapper class for allocating/freeing communicators. Always invokes allocateCommunicatorComponents() and freeCommunicatorComponents(). |
| class | `Request` | An opaque wrapper for MPI_Request with a vendor-independent representation that does not depend on any `<mpi.h>` header. |

Public Types | |

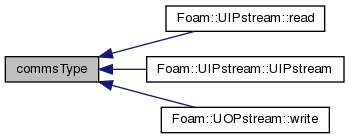

| enum | commsTypes : char { blocking, scheduled, nonBlocking } |

| Communications types. More... | |

| enum | sendModes : char { normal, sync } |

| Different MPI-send modes (ignored for commsTypes::blocking) More... | |

| typedef IntRange< int > | rangeType |

| Int ranges are used for MPI ranks (processes) More... | |

Public Member Functions | |

| ClassName ("UPstream") | |

| Declare name of the class and its debug switch. More... | |

| UPstream (const commsTypes commsType) noexcept | |

| Construct for given communication type. More... | |

| commsTypes | commsType () const noexcept |

| Get the communications type of the stream. More... | |

| commsTypes | commsType (const commsTypes ct) noexcept |

| Set the communications type of the stream. More... | |

| template<class T > | |

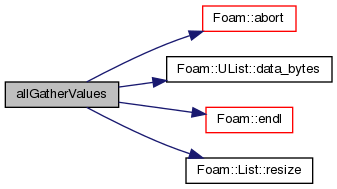

| Foam::List< T > | allGatherValues (const T &localValue, const label comm) |

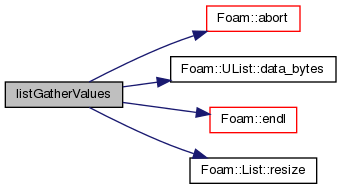

| template<class T > | |

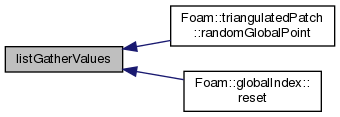

| Foam::List< T > | listGatherValues (const T &localValue, const label comm) |
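The two templates gather one value per rank into a list. Going by the descriptions of the static overloads further down, allGatherValues() is an allgather (every rank receives the whole list) while listGatherValues() is a gather to the master. The serial model below only illustrates that difference: `simulateAllGather` and `simulateListGather` are hypothetical helpers (not OpenFOAM API), ranks are plain vector indices, and the empty result on non-master ranks is an assumption of the sketch.

```cpp
#include <cassert>
#include <vector>

// Serial model: values[r] is the localValue contributed by rank r, and the
// returned outer vector holds what each rank r ends up with.
// Rank 0 plays the role of the master (cf. UPstream::masterNo()).

// Allgather style: every rank receives the full list.
template<class T>
std::vector<std::vector<T>> simulateAllGather(const std::vector<T>& values)
{
    return std::vector<std::vector<T>>(values.size(), values);
}

// Gather style: only the master receives the list; the other ranks are
// assumed (for this sketch) to get an empty list.
template<class T>
std::vector<std::vector<T>> simulateListGather(const std::vector<T>& values)
{
    std::vector<std::vector<T>> received(values.size());
    if (!values.empty())
    {
        received[0] = values;  // list location r holds rank r's value
    }
    return received;
}
```

With contributions `{10, 20, 30}`, the allgather model gives every rank `{10, 20, 30}`, whereas the gather model gives it only to rank 0.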

Static Public Member Functions | |

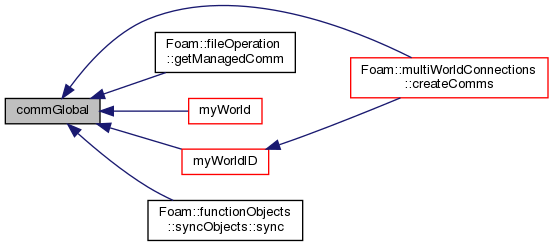

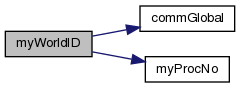

| static constexpr label | commGlobal () noexcept |

| Communicator for all ranks, irrespective of any local worlds. More... | |

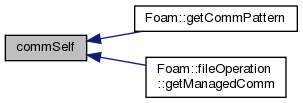

| static constexpr label | commSelf () noexcept |

| Communicator within the current rank only. More... | |

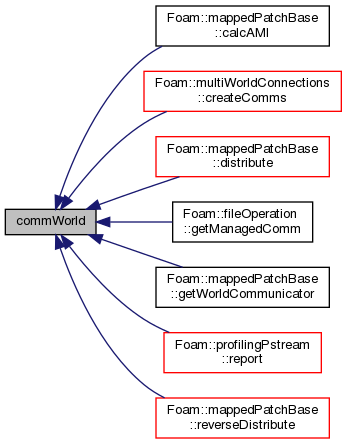

| static label | commWorld () noexcept |

| Communicator for all ranks (respecting any local worlds) More... | |

| static label | commWorld (const label communicator) noexcept |

| Set world communicator. Negative values are a no-op. More... | |

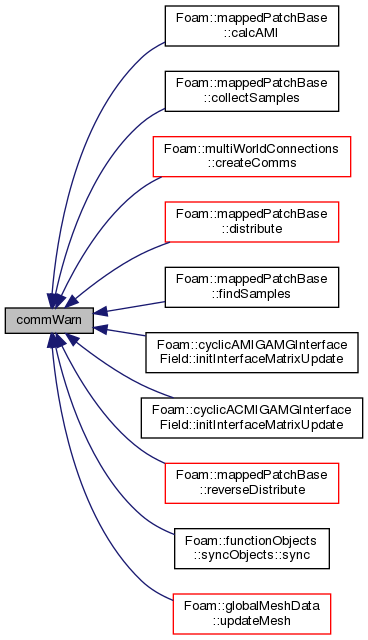

| static label | commWarn (const label communicator) noexcept |

| Alter communicator debugging setting. Warns for use of any communicator differing from specified. More... | |

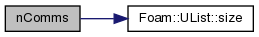

| static label | nComms () noexcept |

| Number of currently defined communicators. More... | |

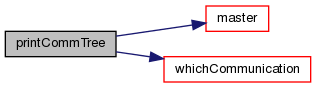

| static void | printCommTree (const label communicator) |

| Debugging: print the communication tree. More... | |

| static label | commIntraHost () |

| Demand-driven: Intra-host communicator (respects any local worlds) More... | |

| static label | commInterHost () |

| Demand-driven: Inter-host communicator (respects any local worlds) More... | |

| static bool | hasHostComms () |

| Test for presence of any intra or inter host communicators. More... | |

| static void | clearHostComms () |

| Remove any existing intra and inter host communicators. More... | |

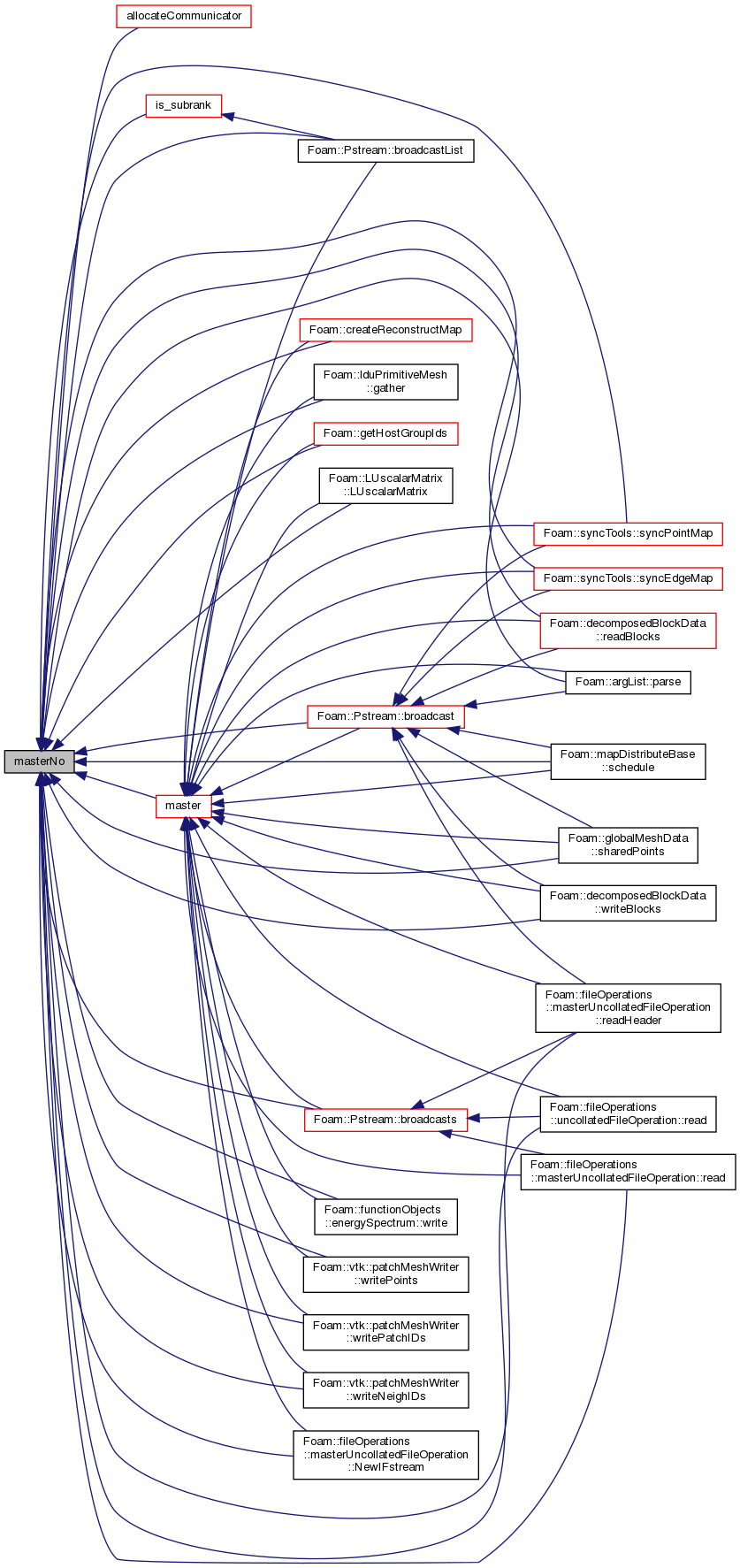

| static label | allocateCommunicator (const label parent, const labelRange &subRanks, const bool withComponents=true) |

| Allocate new communicator with contiguous sub-ranks on the parent communicator. More... | |

| static label | allocateCommunicator (const label parent, const labelUList &subRanks, const bool withComponents=true) |

| Allocate new communicator with sub-ranks on the parent communicator. More... | |

| static void | freeCommunicator (const label communicator, const bool withComponents=true) |

| Free a previously allocated communicator. More... | |

| static label | allocateInterHostCommunicator (const label parentCommunicator=worldComm) |

| Allocate an inter-host communicator. More... | |

| static label | allocateIntraHostCommunicator (const label parentCommunicator=worldComm) |

| Allocate an intra-host communicator. More... | |

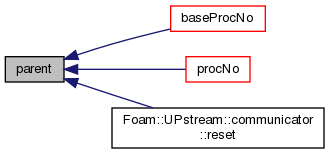

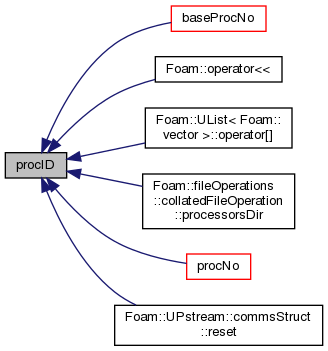

| static int | baseProcNo (label comm, int procID) |

| Return physical processor number (i.e. processor number in worldComm) given communicator and processor. More... | |

| static label | procNo (const label comm, const int baseProcID) |

| Return processor number in communicator (given physical processor number) (= reverse of baseProcNo) More... | |

| static label | procNo (const label comm, const label currentComm, const int currentProcID) |

| Return processor number in communicator (given processor number and communicator) More... | |

| static void | addValidParOptions (HashTable< string > &validParOptions) |

| Add the valid option this type of communications library adds/requires on the command line. More... | |

| static bool | init (int &argc, char **&argv, const bool needsThread) |

| Initialisation function called from main. More... | |

| static bool | initNull () |

| Special purpose initialisation function. More... | |

| static void | barrier (const label communicator, UPstream::Request *req=nullptr) |

| Impose a synchronisation barrier (optionally non-blocking) More... | |

| static std::pair< int, int > | probeMessage (const UPstream::commsTypes commsType, const int fromProcNo, const int tag=UPstream::msgType(), const label communicator=worldComm) |

| Probe for an incoming message. More... | |

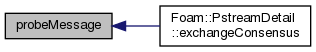

| static label | nRequests () noexcept |

| Number of outstanding requests (on the internal list of requests) More... | |

| static void | resetRequests (const label n) |

| Truncate outstanding requests to given length, which is expected to be in the range [0 to nRequests()]. More... | |

| static void | addRequest (UPstream::Request &req) |

| Transfer the (wrapped) MPI request to the internal global list. More... | |

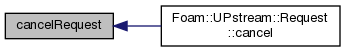

| static void | cancelRequest (const label i) |

| Non-blocking comms: cancel and free outstanding request. Corresponds to MPI_Cancel() + MPI_Request_free() More... | |

| static void | cancelRequest (UPstream::Request &req) |

| Non-blocking comms: cancel and free outstanding request. Corresponds to MPI_Cancel() + MPI_Request_free() More... | |

| static void | cancelRequests (UList< UPstream::Request > &requests) |

| Non-blocking comms: cancel and free outstanding requests. Corresponds to MPI_Cancel() + MPI_Request_free() More... | |

| static void | removeRequests (const label pos, label len=-1) |

| Non-blocking comms: cancel/free outstanding requests (from position onwards) and remove from internal list of requests. Corresponds to MPI_Cancel() + MPI_Request_free() More... | |

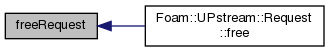

| static void | freeRequest (UPstream::Request &req) |

| Non-blocking comms: free outstanding request. Corresponds to MPI_Request_free() More... | |

| static void | freeRequests (UList< UPstream::Request > &requests) |

| Non-blocking comms: free outstanding requests. Corresponds to MPI_Request_free() More... | |

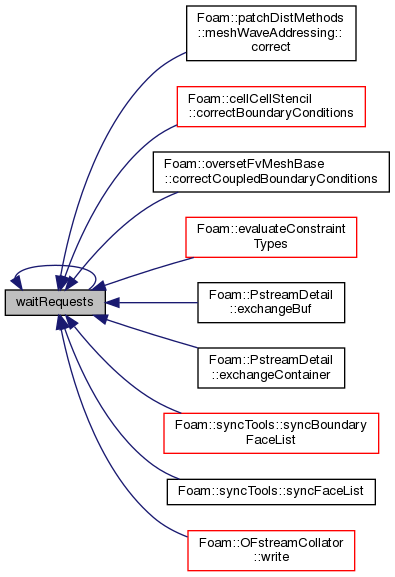

| static void | waitRequests (const label pos, label len=-1) |

| Wait until all requests (from position onwards) have finished. Corresponds to MPI_Waitall() More... | |

| static void | waitRequests (UList< UPstream::Request > &requests) |

| Wait until all requests have finished. Corresponds to MPI_Waitall() More... | |

| static bool | waitAnyRequest (const label pos, label len=-1) |

| Wait until any request (from position onwards) has finished. Corresponds to MPI_Waitany() More... | |

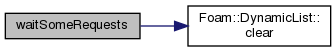

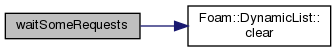

| static bool | waitSomeRequests (const label pos, label len=-1, DynamicList< int > *indices=nullptr) |

| Wait until some requests (from position onwards) have finished. Corresponds to MPI_Waitsome() More... | |

| static bool | waitSomeRequests (UList< UPstream::Request > &requests, DynamicList< int > *indices=nullptr) |

| Wait until some requests have finished. Corresponds to MPI_Waitsome() More... | |

| static label | waitAnyRequest (UList< UPstream::Request > &requests) |

| Wait until any request has finished and return its index. Corresponds to MPI_Waitany() More... | |

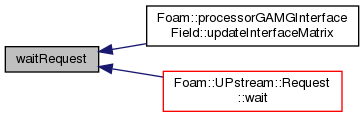

| static void | waitRequest (const label i) |

| Wait until request i has finished. Corresponds to MPI_Wait() More... | |

| static void | waitRequest (UPstream::Request &req) |

| Wait until specified request has finished. Corresponds to MPI_Wait() More... | |

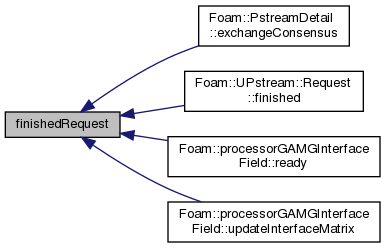

| static bool | finishedRequest (const label i) |

| Non-blocking comms: has request i finished? Corresponds to MPI_Test() More... | |

| static bool | finishedRequest (UPstream::Request &req) |

| Non-blocking comms: has request finished? Corresponds to MPI_Test() More... | |

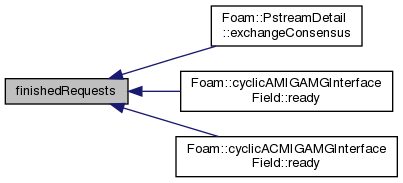

| static bool | finishedRequests (const label pos, label len=-1) |

| Non-blocking comms: have all requests (from position onwards) finished? Corresponds to MPI_Testall() More... | |

| static bool | finishedRequests (UList< UPstream::Request > &requests) |

| Non-blocking comms: have all requests finished? Corresponds to MPI_Testall() More... | |

| static bool | finishedRequestPair (label &req0, label &req1) |

| Non-blocking comms: have both requests finished? Corresponds to pair of MPI_Test() More... | |

| static void | waitRequestPair (label &req0, label &req1) |

| Non-blocking comms: wait for both requests to finish. Corresponds to pair of MPI_Wait() More... | |

| static bool | parRun (const bool on) noexcept |

| Set as parallel run on/off. More... | |

| static bool & | parRun () noexcept |

| Test if this a parallel run. More... | |

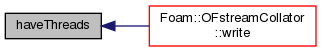

| static bool | haveThreads () noexcept |

| Have support for threads. More... | |

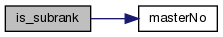

| static constexpr int | masterNo () noexcept |

| Relative rank for the master process - is always 0. More... | |

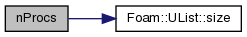

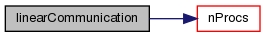

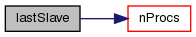

| static label | nProcs (const label communicator=worldComm) |

| Number of ranks in parallel run (for given communicator). It is 1 for serial run. More... | |

| static int | myProcNo (const label communicator=worldComm) |

| Rank of this process in the communicator (starting from masterNo()). Can be negative if the process is not a rank in the communicator. More... | |

| static bool | master (const label communicator=worldComm) |

| True if process corresponds to the master rank in the communicator. More... | |

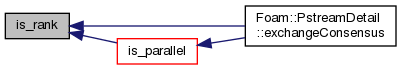

| static bool | is_rank (const label communicator=worldComm) |

| True if process corresponds to any rank (master or sub-rank) in the given communicator. More... | |

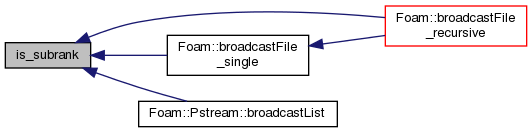

| static bool | is_subrank (const label communicator=worldComm) |

| True if process corresponds to a sub-rank in the given communicator. More... | |

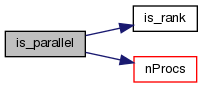

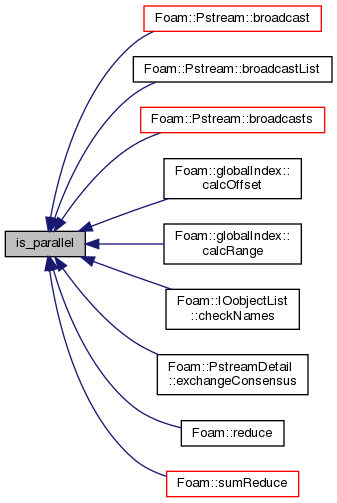

| static bool | is_parallel (const label communicator=worldComm) |

| True if parallel algorithm or exchange is required. More... | |

| static label | parent (const label communicator) |

| The parent communicator. More... | |

| static List< int > & | procID (const label communicator) |

| The list of ranks within a given communicator. More... | |

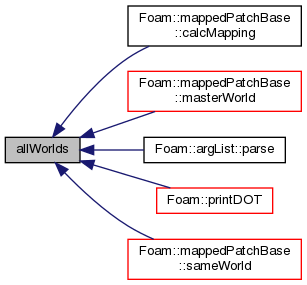

| static const wordList & | allWorlds () noexcept |

| All worlds. More... | |

| static const labelList & | worldIDs () noexcept |

| The indices into allWorlds for all processes. More... | |

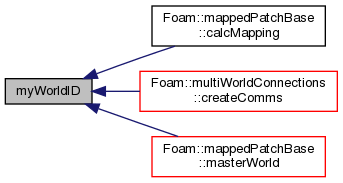

| static label | myWorldID () |

| My worldID. More... | |

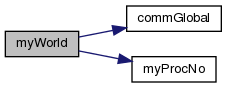

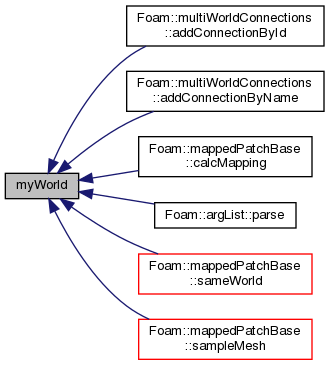

| static const word & | myWorld () |

| My world. More... | |

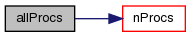

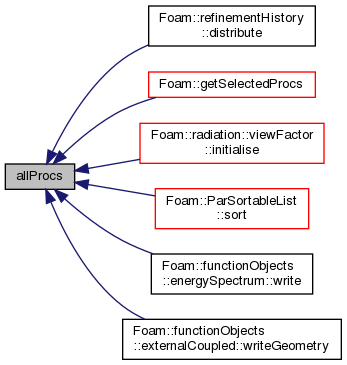

| static rangeType | allProcs (const label communicator=worldComm) |

| Range of process indices for all processes. More... | |

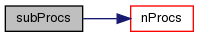

| static rangeType | subProcs (const label communicator=worldComm) |

| Range of process indices for sub-processes. More... | |

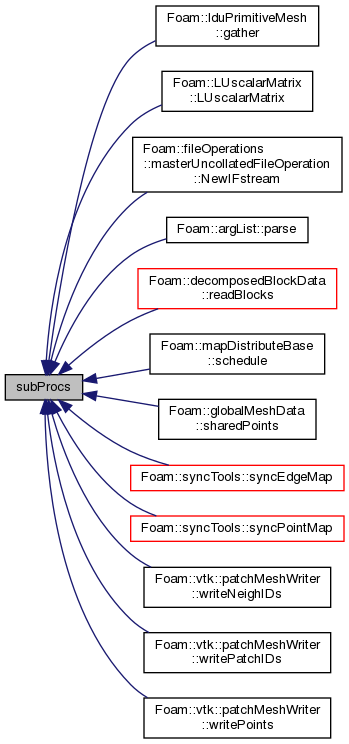

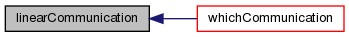

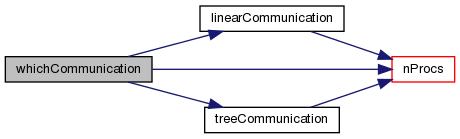

| static const List< commsStruct > & | linearCommunication (const label communicator=worldComm) |

| Communication schedule for linear all-to-master (proc 0) More... | |

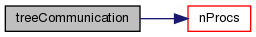

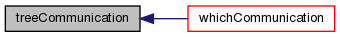

| static const List< commsStruct > & | treeCommunication (const label communicator=worldComm) |

| Communication schedule for tree all-to-master (proc 0) More... | |

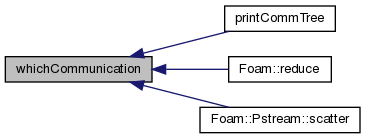

| static const List< commsStruct > & | whichCommunication (const label communicator=worldComm) |

| Communication schedule for linear/tree all-to-master (proc 0). Chooses based on the value of UPstream::nProcsSimpleSum. More... | |

| static int & | msgType () noexcept |

| Message tag of standard messages. More... | |

| static int | msgType (int val) noexcept |

| Set the message tag for standard messages. More... | |

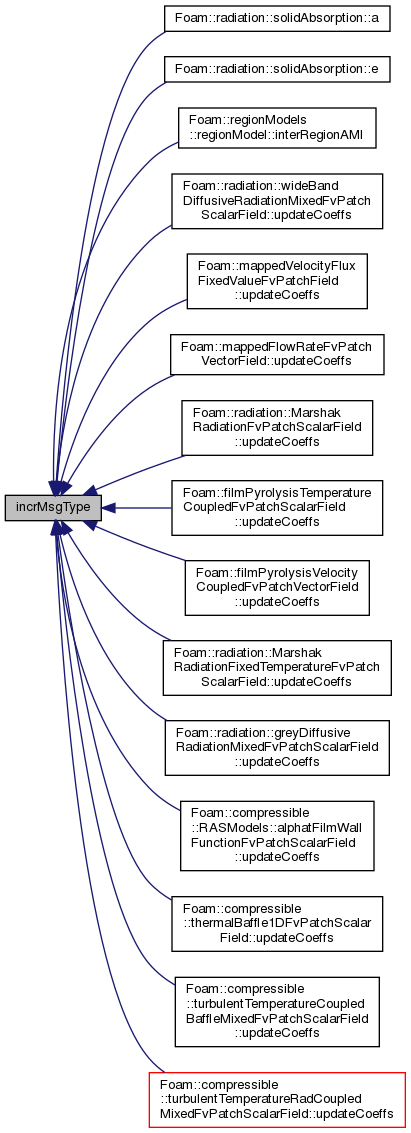

| static int | incrMsgType (int val=1) noexcept |

| Increment the message tag for standard messages. More... | |

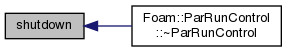

| static void | shutdown (int errNo=0) |

| Shutdown (finalize) MPI as required. More... | |

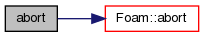

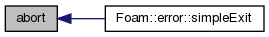

| static void | abort () |

| Call MPI_Abort with no other checks or cleanup. More... | |

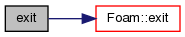

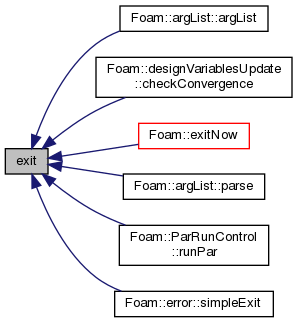

| static void | exit (int errNo=1) |

| Shutdown (finalize) MPI as required and exit program with errNo. More... | |

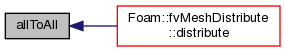

| static void | allToAll (const UList< int32_t > &sendData, UList< int32_t > &recvData, const label communicator=worldComm) |

Exchange int32_t data with all ranks in communicator. More... | |

| static void | allToAllConsensus (const UList< int32_t > &sendData, UList< int32_t > &recvData, const int tag, const label communicator=worldComm) |

Exchange non-zero int32_t data between ranks [NBX]. More... | |

| static void | allToAllConsensus (const Map< int32_t > &sendData, Map< int32_t > &recvData, const int tag, const label communicator=worldComm) |

Exchange int32_t data between ranks [NBX]. More... | |

| static Map< int32_t > | allToAllConsensus (const Map< int32_t > &sendData, const int tag, const label communicator=worldComm) |

Exchange int32_t data between ranks [NBX]. More... | |

| static void | allToAll (const UList< int64_t > &sendData, UList< int64_t > &recvData, const label communicator=worldComm) |

Exchange int64_t data with all ranks in communicator. More... | |

| static void | allToAllConsensus (const UList< int64_t > &sendData, UList< int64_t > &recvData, const int tag, const label communicator=worldComm) |

Exchange non-zero int64_t data between ranks [NBX]. More... | |

| static void | allToAllConsensus (const Map< int64_t > &sendData, Map< int64_t > &recvData, const int tag, const label communicator=worldComm) |

Exchange int64_t data between ranks [NBX]. More... | |

| static Map< int64_t > | allToAllConsensus (const Map< int64_t > &sendData, const int tag, const label communicator=worldComm) |

Exchange int64_t data between ranks [NBX]. More... | |

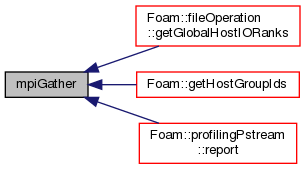

| static void | mpiGather (const char *sendData, char *recvData, int count, const label communicator=worldComm) |

Receive identically-sized char data from all ranks. More... | |

| static void | mpiScatter (const char *sendData, char *recvData, int count, const label communicator=worldComm) |

Send identically-sized char data to all ranks. More... | |

| static void | mpiAllGather (char *allData, int count, const label communicator=worldComm) |

Gather/scatter identically-sized char data. More... | |

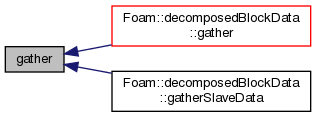

| static void | gather (const char *sendData, int sendCount, char *recvData, const UList< int > &recvCounts, const UList< int > &recvOffsets, const label communicator=worldComm) |

Receive variable length char data from all ranks. More... | |

| static void | scatter (const char *sendData, const UList< int > &sendCounts, const UList< int > &sendOffsets, char *recvData, int recvCount, const label communicator=worldComm) |

Send variable length char data to all ranks. More... | |

| static void | mpiGather (const int32_t *sendData, int32_t *recvData, int count, const label communicator=worldComm) |

Receive identically-sized int32_t data from all ranks. More... | |

| static void | mpiScatter (const int32_t *sendData, int32_t *recvData, int count, const label communicator=worldComm) |

Send identically-sized int32_t data to all ranks. More... | |

| static void | mpiAllGather (int32_t *allData, int count, const label communicator=worldComm) |

Gather/scatter identically-sized int32_t data. More... | |

| static void | gather (const int32_t *sendData, int sendCount, int32_t *recvData, const UList< int > &recvCounts, const UList< int > &recvOffsets, const label communicator=worldComm) |

Receive variable length int32_t data from all ranks. More... | |

| static void | scatter (const int32_t *sendData, const UList< int > &sendCounts, const UList< int > &sendOffsets, int32_t *recvData, int recvCount, const label communicator=worldComm) |

Send variable length int32_t data to all ranks. More... | |

| static void | mpiGather (const int64_t *sendData, int64_t *recvData, int count, const label communicator=worldComm) |

Receive identically-sized int64_t data from all ranks. More... | |

| static void | mpiScatter (const int64_t *sendData, int64_t *recvData, int count, const label communicator=worldComm) |

Send identically-sized int64_t data to all ranks. More... | |

| static void | mpiAllGather (int64_t *allData, int count, const label communicator=worldComm) |

Gather/scatter identically-sized int64_t data. More... | |

| static void | gather (const int64_t *sendData, int sendCount, int64_t *recvData, const UList< int > &recvCounts, const UList< int > &recvOffsets, const label communicator=worldComm) |

Receive variable length int64_t data from all ranks. More... | |

| static void | scatter (const int64_t *sendData, const UList< int > &sendCounts, const UList< int > &sendOffsets, int64_t *recvData, int recvCount, const label communicator=worldComm) |

Send variable length int64_t data to all ranks. More... | |

| static void | mpiGather (const uint32_t *sendData, uint32_t *recvData, int count, const label communicator=worldComm) |

Receive identically-sized uint32_t data from all ranks. More... | |

| static void | mpiScatter (const uint32_t *sendData, uint32_t *recvData, int count, const label communicator=worldComm) |

Send identically-sized uint32_t data to all ranks. More... | |

| static void | mpiAllGather (uint32_t *allData, int count, const label communicator=worldComm) |

Gather/scatter identically-sized uint32_t data. More... | |

| static void | gather (const uint32_t *sendData, int sendCount, uint32_t *recvData, const UList< int > &recvCounts, const UList< int > &recvOffsets, const label communicator=worldComm) |

Receive variable length uint32_t data from all ranks. More... | |

| static void | scatter (const uint32_t *sendData, const UList< int > &sendCounts, const UList< int > &sendOffsets, uint32_t *recvData, int recvCount, const label communicator=worldComm) |

Send variable length uint32_t data to all ranks. More... | |

| static void | mpiGather (const uint64_t *sendData, uint64_t *recvData, int count, const label communicator=worldComm) |

Receive identically-sized uint64_t data from all ranks. More... | |

| static void | mpiScatter (const uint64_t *sendData, uint64_t *recvData, int count, const label communicator=worldComm) |

Send identically-sized uint64_t data to all ranks. More... | |

| static void | mpiAllGather (uint64_t *allData, int count, const label communicator=worldComm) |

Gather/scatter identically-sized uint64_t data. More... | |

| static void | gather (const uint64_t *sendData, int sendCount, uint64_t *recvData, const UList< int > &recvCounts, const UList< int > &recvOffsets, const label communicator=worldComm) |

Receive variable length uint64_t data from all ranks. More... | |

| static void | scatter (const uint64_t *sendData, const UList< int > &sendCounts, const UList< int > &sendOffsets, uint64_t *recvData, int recvCount, const label communicator=worldComm) |

Send variable length uint64_t data to all ranks. More... | |

| static void | mpiGather (const float *sendData, float *recvData, int count, const label communicator=worldComm) |

Receive identically-sized float data from all ranks. More... | |

| static void | mpiScatter (const float *sendData, float *recvData, int count, const label communicator=worldComm) |

Send identically-sized float data to all ranks. More... | |

| static void | mpiAllGather (float *allData, int count, const label communicator=worldComm) |

Gather/scatter identically-sized float data. More... | |

| static void | gather (const float *sendData, int sendCount, float *recvData, const UList< int > &recvCounts, const UList< int > &recvOffsets, const label communicator=worldComm) |

Receive variable length float data from all ranks. More... | |

| static void | scatter (const float *sendData, const UList< int > &sendCounts, const UList< int > &sendOffsets, float *recvData, int recvCount, const label communicator=worldComm) |

Send variable length float data to all ranks. More... | |

| static void | mpiGather (const double *sendData, double *recvData, int count, const label communicator=worldComm) |

Receive identically-sized double data from all ranks. More... | |

| static void | mpiScatter (const double *sendData, double *recvData, int count, const label communicator=worldComm) |

Send identically-sized double data to all ranks. More... | |

| static void | mpiAllGather (double *allData, int count, const label communicator=worldComm) |

Gather/scatter identically-sized double data. More... | |

| static void | gather (const double *sendData, int sendCount, double *recvData, const UList< int > &recvCounts, const UList< int > &recvOffsets, const label communicator=worldComm) |

Receive variable length double data from all ranks. More... | |

| static void | scatter (const double *sendData, const UList< int > &sendCounts, const UList< int > &sendOffsets, double *recvData, int recvCount, const label communicator=worldComm) |

Send variable length double data to all ranks. More... | |

| template<class T > | |

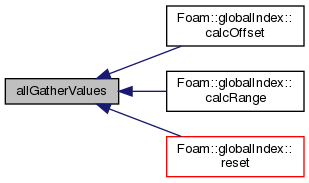

| static List< T > | allGatherValues (const T &localValue, const label communicator=worldComm) |

| Allgather individual values into list locations. More... | |

| template<class T > | |

| static List< T > | listGatherValues (const T &localValue, const label communicator=worldComm) |

| Gather individual values into list locations. More... | |

| template<class T > | |

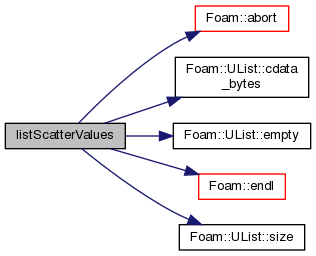

| static T | listScatterValues (const UList< T > &allValues, const label communicator=worldComm) |

| Scatter individual values from list locations. More... | |

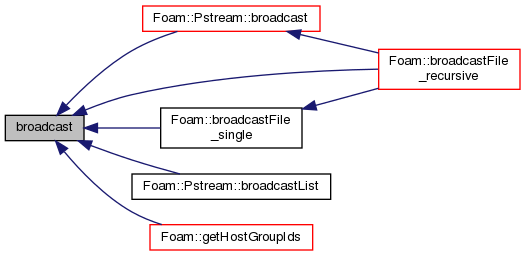

| static bool | broadcast (char *buf, const std::streamsize bufSize, const label communicator, const int rootProcNo=masterNo()) |

| Broadcast buffer contents to all processes in given communicator. The sizes must match on all processes. More... | |

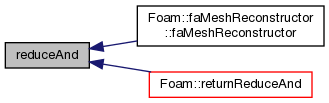

| static void | reduceAnd (bool &value, const label communicator=worldComm) |

| Logical (and) reduction (MPI_AllReduce) More... | |

| static void | reduceOr (bool &value, const label communicator=worldComm) |

| Logical (or) reduction (MPI_AllReduce) More... | |

| static void | waitRequests () |

| Wait for all requests to finish. More... | |

| static constexpr int | firstSlave () noexcept |

| Process index of first sub-process. More... | |

| static int | lastSlave (const label communicator=worldComm) |

| Process index of last sub-process. More... | |
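The variable-length gather() and scatter() overloads above take per-rank counts together with offsets, apparently in the style of MPI_Gatherv/MPI_Scatterv displacements. A common way to build the offsets is an exclusive prefix sum of the counts, so that rank r's data occupies [offsets[r], offsets[r] + counts[r]) in the master's buffer. The serial sketch below assumes that contiguous layout; `offsetsFromCounts` and `serialGatherv` are hypothetical names, and std::vector<int> stands in for UList<int>.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Exclusive prefix sum: offsets[r] = counts[0] + ... + counts[r-1]
std::vector<int> offsetsFromCounts(const std::vector<int>& counts)
{
    std::vector<int> offsets(counts.size(), 0);
    for (std::size_t r = 1; r < counts.size(); ++r)
    {
        offsets[r] = offsets[r-1] + counts[r-1];
    }
    return offsets;
}

// Serial model of a variable-length gather: perRank[r] is what rank r sends.
// Returns the master's receive buffer with rank r's data at offsets[r].
std::vector<char> serialGatherv(const std::vector<std::vector<char>>& perRank)
{
    std::vector<int> counts;
    for (const auto& data : perRank)
    {
        counts.push_back(static_cast<int>(data.size()));
    }
    const std::vector<int> offsets = offsetsFromCounts(counts);

    const int total = counts.empty() ? 0 : offsets.back() + counts.back();
    std::vector<char> recvData(static_cast<std::size_t>(total));
    for (std::size_t r = 0; r < perRank.size(); ++r)
    {
        for (int i = 0; i < counts[r]; ++i)
        {
            recvData[offsets[r] + i] = perRank[r][i];
        }
    }
    return recvData;
}
```

Ranks that send nothing simply get a zero count, and their offset coincides with the next rank's offset.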

Static Public Attributes | |

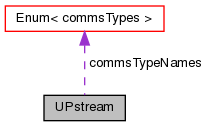

| static const Enum< commsTypes > | commsTypeNames |

| Enumerated names for the communication types. More... | |

| static bool | floatTransfer |

| Should compact transfer be used in which floats replace doubles reducing the bandwidth requirement at the expense of some loss in accuracy. More... | |

| static int | nProcsSimpleSum |

| Number of processors to change from linear to tree communication. More... | |

| static int | nProcsNonblockingExchange |

| Number of processors to change to nonBlocking consensual exchange (NBX). Ignored for zero or negative values. More... | |

| static int | nPollProcInterfaces |

| Number of polling cycles in processor updates. More... | |

| static commsTypes | defaultCommsType |

| Default commsType. More... | |

| static int | maxCommsSize |

| Optional maximum message size (bytes) More... | |

| static int | tuning_NBX_ |

| Tuning parameters for non-blocking exchange (NBX) More... | |

| static const int | mpiBufferSize |

| MPI buffer-size (bytes) More... | |

| static label | worldComm |

| Communicator for all ranks. May differ from commGlobal() if local worlds are in use. More... | |

| static label | warnComm |

| Debugging: warn for use of any communicator differing from warnComm. More... | |
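whichCommunication() selects between the linear and tree all-to-master schedules based on nProcsSimpleSum. The sketch below illustrates that choice under stated assumptions: small communicators (nProcs < nProcsSimpleSum) use the linear schedule, and the tree is a binomial tree in which a rank's parent is obtained by clearing its lowest set bit. Both the comparison and the tree shape are illustrative choices, not necessarily what commsStruct builds, and `Schedule`, `linearSchedule`, `treeSchedule`, `whichSchedule` and `hopsToMaster` are hypothetical names.

```cpp
#include <cassert>
#include <vector>

// For each rank: the rank it sends to ("above") on the way to the master.
// The master (rank 0) has no parent, marked with -1.
using Schedule = std::vector<int>;

// Linear: every sub-rank talks directly to the master.
Schedule linearSchedule(int nProcs)
{
    Schedule above(nProcs, 0);
    if (nProcs > 0) above[0] = -1;
    return above;
}

// Binomial tree: the parent of rank r is r with its lowest set bit cleared,
// so the master is reached in at most ceil(log2(nProcs)) hops.
Schedule treeSchedule(int nProcs)
{
    Schedule above(nProcs, -1);
    for (int r = 1; r < nProcs; ++r)
    {
        above[r] = r & (r - 1);
    }
    return above;
}

// Choose by processor count, mimicking the role of nProcsSimpleSum.
Schedule whichSchedule(int nProcs, int nProcsSimpleSum)
{
    return (nProcs < nProcsSimpleSum) ? linearSchedule(nProcs) : treeSchedule(nProcs);
}

// Number of hops from rank r up to the master.
int hopsToMaster(const Schedule& above, int r)
{
    int hops = 0;
    while (above[r] >= 0)
    {
        r = above[r];
        ++hops;
    }
    return hops;
}
```

The trade-off is the usual one: the linear schedule has a single hop but funnels all traffic through the master, while the tree spreads the work at the cost of logarithmically many hops.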

`typedef IntRange< int > UPstream::rangeType`

Int ranges are used for MPI ranks (processes).

Definition at line 67 of file UPstream.H.
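rangeType is an IntRange<int>, i.e. a start value plus a size. Read together with the member descriptions, allProcs() covers ranks [0, nProcs), subProcs() covers the sub-ranks [1, nProcs), and master(), is_rank() and is_subrank() follow from the value of myProcNo(), which is negative for a process outside the communicator. The sketch below models that reading with a hypothetical `RankRange` struct in place of IntRange and free functions in place of the UPstream members; it is an illustration, not the OpenFOAM implementation.

```cpp
#include <cassert>

// Hypothetical stand-in for IntRange<int>: the half-open range [start, start+size)
struct RankRange
{
    int start;
    int size;

    int first() const { return start; }
    int last() const { return start + size - 1; }
    bool contains(int rank) const { return rank >= start && rank < start + size; }
};

constexpr int masterNo = 0;  // the master is always rank 0

// All ranks of a communicator with nProcs ranks
RankRange allProcs(int nProcs) { return RankRange{masterNo, nProcs}; }

// Sub-ranks only: every rank after the master
RankRange subProcs(int nProcs)
{
    return RankRange{masterNo + 1, nProcs > 1 ? nProcs - 1 : 0};
}

// Predicates on this process's rank within a communicator
bool isMaster(int myProcNo) { return myProcNo == masterNo; }
bool isRank(int myProcNo) { return myProcNo >= 0; }           // master or sub-rank
bool isSubrank(int myProcNo) { return myProcNo > masterNo; }
```

With four ranks, the sub-range spans ranks 1 to 3, which is consistent with firstSlave() being the index of the first sub-process.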

`enum UPstream::commsTypes : char` [strong]

Communications types.

| Enumerator | Description |
|---|---|
| blocking | "blocking" : (MPI_Bsend, MPI_Recv) |
| scheduled | "scheduled" : (MPI_Send, MPI_Recv) |
| nonBlocking | "nonBlocking" : (MPI_Isend, MPI_Irecv) |

Definition at line 72 of file UPstream.H.

`enum UPstream::sendModes : char` [strong]

Different MPI send modes (ignored for commsTypes::blocking).

| Enumerator | Description |
|---|---|
| normal | (MPI_Send, MPI_Isend) |
| sync | (MPI_Ssend, MPI_Issend) |

Definition at line 87 of file UPstream.H.
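Taken together, the two enumerator tables imply a mapping from (commsType, sendMode) to the MPI send routine, with the send mode ignored for blocking transfers. The sketch encodes exactly that mapping as strings. It mirrors the two enums locally rather than including UPstream.H, and `sendRoutine` is a hypothetical helper; it reproduces only what the tables state, not how UPstream dispatches internally.

```cpp
#include <cassert>
#include <string>

// Local mirrors of UPstream::commsTypes and UPstream::sendModes
enum class commsTypes : char { blocking, scheduled, nonBlocking };
enum class sendModes : char { normal, sync };

// Name of the MPI send routine implied by the enumerator tables.
// The send mode is ignored for commsTypes::blocking.
std::string sendRoutine(commsTypes ct, sendModes mode)
{
    const bool sync = (mode == sendModes::sync);
    switch (ct)
    {
        case commsTypes::blocking:    return "MPI_Bsend";
        case commsTypes::scheduled:   return sync ? "MPI_Ssend" : "MPI_Send";
        case commsTypes::nonBlocking: return sync ? "MPI_Issend" : "MPI_Isend";
    }
    return {};  // not reached for valid enumerators
}
```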

`UPstream::UPstream (const commsTypes commsType)` [inline, explicit, noexcept]

Construct for given communication type.

Definition at line 495 of file UPstream.H.

`UPstream::ClassName ("UPstream")`

Declare name of the class and its debug switch.

`constexpr label UPstream::commGlobal ()` [inline, static, noexcept]

Communicator for all ranks, irrespective of any local worlds.

Definition at line 419 of file UPstream.H.

Referenced by multiWorldConnections::createComms(), fileOperation::getManagedComm(), UPstream::myWorld(), UPstream::myWorldID(), and syncObjects::sync().

`constexpr label UPstream::commSelf ()` [inline, static, noexcept]

Communicator within the current rank only.

Definition at line 424 of file UPstream.H.

Referenced by Foam::getCommPattern(), and fileOperation::getManagedComm().

`label UPstream::commWorld ()` [inline, static, noexcept]

Communicator for all ranks (respecting any local worlds)

Definition at line 429 of file UPstream.H.

References UPstream::worldComm.

Referenced by mappedPatchBase::calcAMI(), multiWorldConnections::createComms(), mappedPatchBase::distribute(), fileOperation::getManagedComm(), mappedPatchBase::getWorldCommunicator(), profilingPstream::report(), and mappedPatchBase::reverseDistribute().

`label UPstream::commWorld (const label communicator)` [inline, static, noexcept]

Set world communicator. Negative values are a no-op.

Definition at line 436 of file UPstream.H.

References UPstream::worldComm.

`label UPstream::commWarn (const label communicator)` [inline, static, noexcept]

Alter communicator debugging setting. Warns for use of any communicator differing from the specified one.

Definition at line 449 of file UPstream.H.

References UPstream::warnComm.

Referenced by mappedPatchBase::calcAMI(), mappedPatchBase::collectSamples(), multiWorldConnections::createComms(), mappedPatchBase::distribute(), mappedPatchBase::findSamples(), cyclicAMIGAMGInterfaceField::initInterfaceMatrixUpdate(), cyclicACMIGAMGInterfaceField::initInterfaceMatrixUpdate(), mappedPatchBase::reverseDistribute(), syncObjects::sync(), and globalMeshData::updateMesh().

`label UPstream::nComms ()` [inline, static, noexcept]

Number of currently defined communicators.

Definition at line 459 of file UPstream.H.

References UList< T >::size().

`void UPstream::printCommTree (const label communicator)` [static]

Debugging: print the communication tree.

Definition at line 671 of file UPstream.C.

References Foam::Info, UPstream::master(), and UPstream::whichCommunication().

`label UPstream::commIntraHost ()` [static]

Demand-driven: Intra-host communicator (respects any local worlds)

Definition at line 682 of file UPstream.C.

`label UPstream::commInterHost ()` [static]

Demand-driven: Inter-host communicator (respects any local worlds)

Definition at line 696 of file UPstream.C.

`bool UPstream::hasHostComms ()` [static]

Test for presence of any intra or inter host communicators.

Definition at line 710 of file UPstream.C.

`void UPstream::clearHostComms ()` [static]

Remove any existing intra and inter host communicators.

Definition at line 716 of file UPstream.C.

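`label UPstream::allocateCommunicator (const label parent, const labelRange &subRanks, const bool withComponents=true)` [static]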

Allocate new communicator with contiguous sub-ranks on the parent communicator.

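Implementation note: a process that is not a rank of the new communicator (negative myProcNo_) but whose parent communicator is valid has its rank stored as the negative value -(rank+1). The source gives two alternatives for which rank is encoded; only one of them is meant to apply:

```cpp
if (myProcNo_[index] < 0 && parentIndex >= 0)
{
    // Alternative 1: as global rank
    myProcNo_[index] = -(myProcNo_[worldComm]+1);

    // OR, alternative 2: as parent rank number
    if (myProcNo_[parentIndex] >= 0)
    {
        myProcNo_[index] = -(myProcNo_[parentIndex]+1);
    }
}
```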

| Parameter | Description |
|---|---|
| parent | The parent communicator |
| subRanks | The contiguous sub-ranks of parent to use |
| withComponents | Call allocateCommunicatorComponents() |

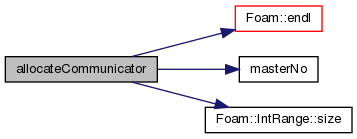

Definition at line 258 of file UPstream.C.

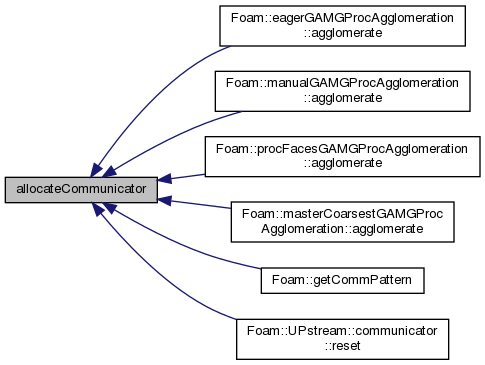

References Foam::ensightOutput::debug, Foam::endl(), UPstream::masterNo(), Foam::nl, Foam::Pout, and IntRange< IntType >::size().

Referenced by eagerGAMGProcAgglomeration::agglomerate(), manualGAMGProcAgglomeration::agglomerate(), procFacesGAMGProcAgglomeration::agglomerate(), masterCoarsestGAMGProcAgglomeration::agglomerate(), Foam::getCommPattern(), and UPstream::communicator::reset().
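The implementation note for allocateCommunicator() stores a non-member's rank r as -(r+1). The +1 shift matters because rank 0 would otherwise be stored as 0 and look like a valid member; with the shift, every non-member value is negative while the original rank stays recoverable. A minimal sketch of that encoding, with hypothetical helper names:

```cpp
#include <cassert>

// Encode rank r (>= 0) of a process that is NOT part of a communicator.
// The +1 shift keeps rank 0 negative (-1), so "negative" always means
// "not a member" while the original rank can still be recovered.
constexpr int encodeNonMember(int rank) { return -(rank + 1); }

// Recover the original rank from an encoded value (inverse of the above).
constexpr int decodeNonMember(int encoded) { return -encoded - 1; }

// A process is a member of the communicator iff its stored rank is >= 0.
constexpr bool isMember(int storedProcNo) { return storedProcNo >= 0; }
```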

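`label UPstream::allocateCommunicator (const label parent, const labelUList &subRanks, const bool withComponents=true)` [static]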

Allocate new communicator with sub-ranks on the parent communicator.

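Implementation note: a process that is not a rank of the new communicator (negative myProcNo_) but whose parent communicator is valid has its rank stored as the negative value -(rank+1). The source gives two alternatives for which rank is encoded; only one of them is meant to apply:

```cpp
if (myProcNo_[index] < 0 && parentIndex >= 0)
{
    // Alternative 1: as global rank
    myProcNo_[index] = -(myProcNo_[worldComm]+1);

    // OR, alternative 2: as parent rank number
    if (myProcNo_[parentIndex] >= 0)
    {
        myProcNo_[index] = -(myProcNo_[parentIndex]+1);
    }
}
```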

| Parameter | Description |
|---|---|
| parent | The parent communicator |
| subRanks | The sub-ranks of parent to use (ignore negative values) |
| withComponents | Call allocateCommunicatorComponents() |

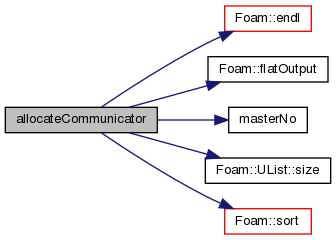

Definition at line 319 of file UPstream.C.

References Foam::ensightOutput::debug, Foam::endl(), Foam::flatOutput(), UPstream::masterNo(), Foam::nl, Foam::Pout, UList< T >::size(), and Foam::sort().
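For this overload the parameter table says negative entries in subRanks are ignored, and the reference list includes Foam::sort(). A plausible reading, assumed here rather than confirmed by the source, is that the accepted parent ranks are sorted and a process's rank in the new communicator is its position in that sorted list. `buildSubRanks` and `rankInSubComm` are hypothetical helpers; the sketch returns -1 for non-members, whereas the real implementation uses the negative encoding described in the note above.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Keep only non-negative parent ranks and sort them (assumed behaviour)
std::vector<int> buildSubRanks(const std::vector<int>& subRanks)
{
    std::vector<int> ranks;
    for (int r : subRanks)
    {
        if (r >= 0) ranks.push_back(r);  // negative entries are ignored
    }
    std::sort(ranks.begin(), ranks.end());
    return ranks;
}

// Rank within the new communicator = position in the sorted list,
// or -1 if this parent rank was not selected.
int rankInSubComm(const std::vector<int>& ranks, int parentRank)
{
    const auto it = std::lower_bound(ranks.begin(), ranks.end(), parentRank);
    if (it != ranks.end() && *it == parentRank)
    {
        return static_cast<int>(it - ranks.begin());
    }
    return -1;
}
```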

|

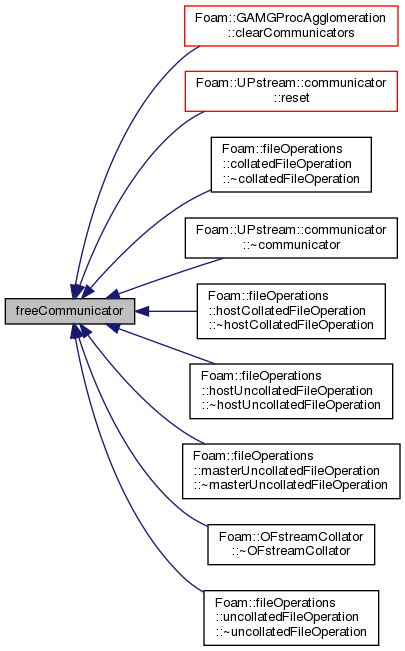

static |

Free a previously allocated communicator.

Ignores placeholder (negative) communicators.

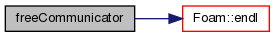

Definition at line 565 of file UPstream.C.

References Foam::ensightOutput::debug, Foam::endl(), and Foam::Pout.

Referenced by GAMGProcAgglomeration::clearCommunicators(), UPstream::communicator::reset(), collatedFileOperation::~collatedFileOperation(), UPstream::communicator::~communicator(), hostCollatedFileOperation::~hostCollatedFileOperation(), hostUncollatedFileOperation::~hostUncollatedFileOperation(), masterUncollatedFileOperation::~masterUncollatedFileOperation(), OFstreamCollator::~OFstreamCollator(), and uncollatedFileOperation::~uncollatedFileOperation().
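The UPstream::communicator class (listed among the callers above via its destructor and reset()) pairs allocation with a guaranteed free. The same RAII idea as a self-contained sketch: `ScopedComm`, `fakeAllocate`, `fakeFree` and the `liveComms` registry are hypothetical stand-ins that only track which handles are live and call neither OpenFOAM nor MPI. Like freeCommunicator(), the release step ignores placeholder (negative) handles.

```cpp
#include <cassert>
#include <set>
#include <utility>

// Hypothetical registry standing in for the library's communicator table
std::set<int> liveComms;
int nextComm = 3;  // arbitrary first handle for the sketch

int fakeAllocate()
{
    liveComms.insert(nextComm);
    return nextComm++;
}

void fakeFree(int comm)
{
    if (comm < 0) return;  // placeholder (negative) communicators are ignored
    liveComms.erase(comm);
}

// RAII owner: allocates on construction, frees on destruction or reset()
class ScopedComm
{
    int comm_ = -1;

public:
    ScopedComm() : comm_(fakeAllocate()) {}
    ~ScopedComm() { fakeFree(comm_); }

    ScopedComm(const ScopedComm&) = delete;
    ScopedComm& operator=(const ScopedComm&) = delete;

    // Moving transfers ownership and leaves a placeholder behind
    ScopedComm(ScopedComm&& other) noexcept : comm_(std::exchange(other.comm_, -1)) {}

    int get() const noexcept { return comm_; }

    // Free the current communicator and keep a negative placeholder
    void reset() { fakeFree(std::exchange(comm_, -1)); }
};
```

Because the moved-from object holds -1, its destructor calls the free routine with a placeholder, which is exactly the case the free routine is documented to ignore.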

`label UPstream::allocateInterHostCommunicator (const label parentCommunicator=worldComm)` [static]

Allocate an inter-host communicator.

Definition at line 401 of file UPstream.C.

References forAll, Foam::getHostGroupIds(), and UList< T >::size().

|

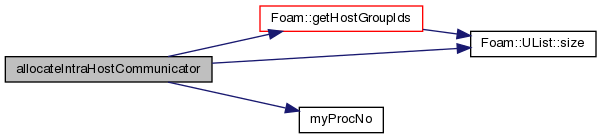

static |

Allocate an intra-host communicator.

Definition at line 424 of file UPstream.C.

References forAll, Foam::getHostGroupIds(), UPstream::myProcNo(), and UList< T >::size().
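Both host communicators are built from host group ids (Foam::getHostGroupIds()). A sketch of the usual partitioning idea, assumed rather than taken from the source: ranks sharing a host id form an intra-host group, and one leader per host, here the lowest rank on that host, forms the inter-host group. `intraHostGroups` and `interHostLeaders` are hypothetical helpers operating on a plain list of host ids.

```cpp
#include <cassert>
#include <map>
#include <vector>

// hostIds[r] = host group id of rank r

// Intra-host groups: ranks that share a host id, in ascending rank order
std::map<int, std::vector<int>> intraHostGroups(const std::vector<int>& hostIds)
{
    std::map<int, std::vector<int>> groups;
    for (int r = 0; r < static_cast<int>(hostIds.size()); ++r)
    {
        groups[hostIds[r]].push_back(r);
    }
    return groups;
}

// Inter-host group: one leader per host (assumed: lowest rank on that host),
// listed in ascending host-id order
std::vector<int> interHostLeaders(const std::vector<int>& hostIds)
{
    std::vector<int> leaders;
    for (const auto& entry : intraHostGroups(hostIds))
    {
        leaders.push_back(entry.second.front());
    }
    return leaders;
}
```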

|

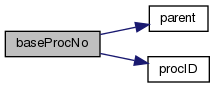

static |

Return physical processor number (i.e. processor number in worldComm) given communicator and processor.

Definition at line 604 of file UPstream.C.

References UPstream::parent(), and UPstream::procID().

Referenced by UPstream::procNo().

|

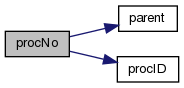

static |

Return processor number in communicator (given physical processor number) (= reverse of baseProcNo)

Definition at line 617 of file UPstream.C.

References UPstream::parent(), and UPstream::procID().

Referenced by UPstream::procNo().

`label UPstream::procNo (const label comm, const label currentComm, const int currentProcID)` [static]

Return processor number in communicator (given processor number and communicator)

Definition at line 634 of file UPstream.C.

References UPstream::baseProcNo(), and UPstream::procNo().
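baseProcNo() and the procNo() overloads translate ranks between a communicator and worldComm, using each communicator's parent and procID list (the members listed in the references above). A simplified model: each hypothetical `Comm` record stores its parent index and the parent ranks it contains; baseProcNo walks up the parents, procNo inverts that walk, and the three-argument procNo goes through the physical rank. The data layout is an assumption of this sketch, not OpenFOAM's internal representation.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical communicator record: procID[i] is the rank, in the parent
// communicator, of rank i of this communicator. parent < 0 marks the root.
struct Comm
{
    int parent;
    std::vector<int> procID;
};

// Rank in the root ("physical") communicator of rank `rank` of comm
int baseProcNo(const std::vector<Comm>& comms, int comm, int rank)
{
    while (comms[comm].parent >= 0)
    {
        rank = comms[comm].procID[rank];
        comm = comms[comm].parent;
    }
    return rank;
}

// Inverse: rank within comm of the given physical rank, or -1 if absent
int procNo(const std::vector<Comm>& comms, int comm, int baseProcID)
{
    const Comm& c = comms[comm];
    if (c.parent < 0)
    {
        return baseProcID;
    }
    const int parentRank = procNo(comms, c.parent, baseProcID);
    const auto it = std::find(c.procID.begin(), c.procID.end(), parentRank);
    return it == c.procID.end() ? -1 : static_cast<int>(it - c.procID.begin());
}

// Rank within comm of rank currentProcID of currentComm (via the physical rank)
int procNo(const std::vector<Comm>& comms, int comm, int currentComm, int currentProcID)
{
    return procNo(comms, comm, baseProcNo(comms, currentComm, currentProcID));
}
```

For example, with a world of six ranks, a sub-communicator of world ranks {1, 3, 5} and a nested one of its ranks {0, 2}, rank 1 of the nested communicator is physical rank 5.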

Add the valid options that this type of communications library adds or requires on the command line.

Definition at line 26 of file UPstream.C.

static

Initialisation function called from main.

Spawns sub-processes and initialises inter-process communication.

Definition at line 40 of file UPstream.C.

References Foam::endl(), Foam::exit(), Foam::FatalError, and FatalErrorInFunction.

Referenced by ParRunControl::runPar().

static

Special purpose initialisation function.

Performs a basic MPI_Init without any other setup. Only used for applications that need MPI communication when OpenFOAM is running in a non-parallel mode.

Definition at line 30 of file UPstream.C.

References Foam::endl(), and WarningInFunction.

static

Impose a synchronisation barrier (optionally non-blocking).

Definition at line 83 of file UPstream.C.

Referenced by Foam::PstreamDetail::exchangeConsensus().

static

Probe for an incoming message.

| Parameter | Description |
|---|---|
| commsType | Blocking or not |
| fromProcNo | The source rank (negative == ANY_SOURCE) |
| tag | The source message tag |
| communicator | The communicator index |

Definition at line 89 of file UPstream.C.

Referenced by Foam::PstreamDetail::exchangeConsensus().

static noexcept

Number of outstanding requests (on the internal list of requests).

Definition at line 45 of file UPstreamRequest.C.

Referenced by meshWaveAddressing::correct(), cellCellStencil::correctBoundaryConditions(), oversetFvMeshBase::correctCoupledBoundaryConditions(), Foam::evaluateConstraintTypes(), Foam::PstreamDetail::exchangeBuf(), Foam::PstreamDetail::exchangeContainer(), processorGAMGInterfaceField::initInterfaceMatrixUpdate(), syncTools::syncBoundaryFaceList(), syncTools::syncFaceList(), and OFstreamCollator::write().

static

Truncate outstanding requests to the given length, which is expected to be in the range [0 to nRequests()].

A no-op for out-of-range values.

Definition at line 47 of file UPstreamRequest.C.
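A common way to use nRequests() together with resetRequests() is to record the length of the internal list before posting a batch of non-blocking operations and later act only on that trailing portion. The following standalone sketch models the internal list with a `std::vector`; `RequestListModel` and its members are hypothetical names, and the truncation rule follows the description above (lengths outside [0, nRequests()] are ignored).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical model of the internal list of outstanding requests.
// Each int stands in for an MPI request handle.
struct RequestListModel
{
    std::vector<int> requests;

    long nRequests() const { return static_cast<long>(requests.size()); }

    void addRequest(int req) { requests.push_back(req); }

    // Truncate to 'n' entries; a no-op if n lies outside [0, nRequests()].
    void resetRequests(long n)
    {
        if (n >= 0 && n <= nRequests())
        {
            requests.resize(static_cast<std::size_t>(n));
        }
    }
};
```

In OpenFOAM sources the same idea commonly appears as `const label startOfRequests = UPstream::nRequests();` before the non-blocking transfers are posted, with that position later passed to waitRequests().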

static

Transfer the (wrapped) MPI request to the internal global list.

A no-op for non-parallel. No special treatment for null requests.

Definition at line 49 of file UPstreamRequest.C.

static

Non-blocking comms: cancel and free an outstanding request. Corresponds to MPI_Cancel() + MPI_Request_free().

A no-op if parRun() == false, if there are no pending requests, or if the index is out of range (0 to nRequests).

Definition at line 51 of file UPstreamRequest.C.

Referenced by UPstream::Request::cancel().

static

Non-blocking comms: cancel and free an outstanding request. Corresponds to MPI_Cancel() + MPI_Request_free().

A no-op if parRun() == false.

Definition at line 52 of file UPstreamRequest.C.

static

Non-blocking comms: cancel and free outstanding requests. Corresponds to MPI_Cancel() + MPI_Request_free().

A no-op if parRun() == false or the list is empty.

Definition at line 53 of file UPstreamRequest.C.

static

Non-blocking comms: cancel and free outstanding requests (from position onwards) and remove them from the internal list of requests. Corresponds to MPI_Cancel() + MPI_Request_free().

A no-op if parRun() == false, if the position is out of range [0 to nRequests()], or if the internal list of requests is empty.

| Parameter | Description |
|---|---|
| pos | starting position within the internal list of requests |
| len | length of slice to remove (negative = until the end) |

Definition at line 55 of file UPstreamRequest.C.

static

Non-blocking comms: free an outstanding request. Corresponds to MPI_Request_free().

A no-op if parRun() == false.

Definition at line 57 of file UPstreamRequest.C.

Referenced by UPstream::Request::free().

static

Non-blocking comms: free outstanding requests. Corresponds to MPI_Request_free().

A no-op if parRun() == false or the list is empty.

Definition at line 58 of file UPstreamRequest.C.

static

Wait until all requests (from position onwards) have finished. Corresponds to MPI_Waitall().

A no-op if parRun() == false, if the position is out of range [0 to nRequests()], or if the internal list of requests is empty.

If checking a trailing portion of the list, it also trims the list of outstanding requests as a side effect. This is a feature (not a bug) to conveniently manage the list.

| Parameter | Description |
|---|---|
| pos | starting position within the internal list of requests |
| len | length of slice to check (negative = until the end) |

Definition at line 60 of file UPstreamRequest.C.
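The (pos, len) slice convention shared by the cancel and wait variants above can be modelled as follows. This is a sketch under assumptions, not the library code: it resolves a slice to a half-open range, clamps an over-long len to the end of the list (the documentation does not say what happens in that case), erases the slice for the cancel variant, and for the wait variant trims the list only when the slice reaches the end. `resolveSlice`, `cancelSliceModel` and `waitSliceModel` are hypothetical names, and completion is simulated by overwriting an entry with -1 (a null request).

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Resolve (pos, len) into a half-open range [first, last) of 'list'.
// An out-of-range pos yields an empty range; negative len means "until the end".
std::pair<long, long> resolveSlice(const std::vector<int>& list, long pos, long len)
{
    const long n = static_cast<long>(list.size());
    if (pos < 0 || pos > n)
    {
        return {0, 0};   // out-of-range position: nothing to do
    }
    const long last = (len < 0) ? n : std::min(n, pos + len);
    return {pos, last};
}

// Cancel variant (hypothetical model): drop the addressed requests from the list.
void cancelSliceModel(std::vector<int>& list, long pos, long len = -1)
{
    const auto [first, last] = resolveSlice(list, pos, len);
    list.erase(list.begin() + first, list.begin() + last);
}

// Wait variant (hypothetical model): mark the addressed requests as done (-1)
// and trim the list if the slice was a trailing portion.
void waitSliceModel(std::vector<int>& list, long pos, long len = -1)
{
    const auto [first, last] = resolveSlice(list, pos, len);
    for (long i = first; i < last; ++i)
    {
        list[i] = -1;
    }
    if (first < last && last == static_cast<long>(list.size()))
    {
        list.resize(first);
    }
}
```

The trimming step is what makes the "record nRequests(), then wait from that position" pattern self-cleaning: once the trailing batch has completed, the list is back to its previous length.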

static

Wait until all requests have finished. Corresponds to MPI_Waitall().

A no-op if parRun() == false or the list is empty.

Definition at line 61 of file UPstreamRequest.C.

static

Wait until any request (from position onwards) has finished. Corresponds to MPI_Waitany().

A no-op that returns false if parRun() == false, if the position is out of range [0 to nRequests()], or if the internal list of requests is empty.

| Parameter | Description |
|---|---|
| pos | starting position within the internal list of requests |
| len | length of slice to check (negative = until the end) |

Definition at line 63 of file UPstreamRequest.C.

static

Wait until some requests (from position onwards) have finished. Corresponds to MPI_Waitsome().

A no-op that returns false if parRun() == false, if the position is out of range [0 to nRequests()], or if the internal list of requests is empty.

| Parameter | Direction | Description |
|---|---|---|
| pos | | starting position within the internal list of requests |
| len | | length of slice to check (negative = until the end) |
| indices | out | the completed request indices relative to the starting position. This is an optional parameter that can be used to recover the indices or simply to avoid reallocations when calling within a loop. |

Definition at line 69 of file UPstreamRequest.C.

References DynamicList< T, SizeMin >::clear().
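The optional `indices` output collects the positions of newly completed requests relative to `pos`, and is cleared first so a caller can reuse the same storage across loop iterations. The sketch below models only that bookkeeping: a hypothetical `isDone` predicate replaces MPI_Waitsome(), which in reality blocks until at least one request completes, and -1 stands for an already-handled (null) request. `waitSomeModel` is a hypothetical name.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical model: scan requests[pos..end), skip handled entries (-1),
// mark the ones reported done by 'isDone', and record their positions
// relative to 'pos'. Returns false when nothing in the slice was pending.
bool waitSomeModel
(
    std::vector<int>& requests,
    long pos,
    const std::function<bool(int)>& isDone,
    std::vector<int>* indices = nullptr
)
{
    if (indices)
    {
        indices->clear();   // reuse the caller's storage
    }
    const long n = static_cast<long>(requests.size());
    if (pos < 0 || pos >= n)
    {
        return false;
    }
    bool anyPending = false;
    for (long i = pos; i < n; ++i)
    {
        if (requests[i] == -1)
        {
            continue;   // already handled
        }
        anyPending = true;
        if (isDone(requests[i]))
        {
            requests[i] = -1;   // mark as handled
            if (indices)
            {
                indices->push_back(static_cast<int>(i - pos));
            }
        }
    }
    return anyPending;
}
```

Calling this repeatedly until it returns false mirrors the usual "process whatever has arrived, then wait again" loop, with the index list reused on every pass.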

static

Wait until some requests have finished. Corresponds to MPI_Waitsome().

A no-op that returns false if parRun() == false, if the list is empty, or if all the requests have already been handled.

| Parameter | Direction | Description |
|---|---|---|
| requests | | the requests |
| indices | out | the completed request indices relative to the starting position. This is an optional parameter that can be used to recover the indices or simply to avoid reallocations when calling within a loop. |

Definition at line 80 of file UPstreamRequest.C.

References DynamicList< T, SizeMin >::clear().

static

Wait until any request has finished and return its index. Corresponds to MPI_Waitany().

Returns -1 if parRun() == false, if the list is empty, or if all the requests have already been handled.

Definition at line 89 of file UPstreamRequest.C.

static

Wait until request i has finished. Corresponds to MPI_Wait().

A no-op if parRun() == false, if there are no pending requests, or if the index is out of range (0 to nRequests).

```cpp
void Foam::UPstream::waitRequests
(
    UPstream::Request& req0,
    UPstream::Request& req1
)
{
    // No-op for non-parallel
    if (!UPstream::parRun())
    {
        return;
    }

    int count = 0;
    MPI_Request waitRequests[2];

    waitRequests[count] = PstreamDetail::Request::get(req0);
    if (MPI_REQUEST_NULL != waitRequests[count])
    {
        ++count;
    }

    waitRequests[count] = PstreamDetail::Request::get(req1);
    if (MPI_REQUEST_NULL != waitRequests[count])
    {
        ++count;
    }

    // Flag in advance as being handled
    req0 = UPstream::Request(MPI_REQUEST_NULL);
    req1 = UPstream::Request(MPI_REQUEST_NULL);

    if (!count)
    {
        return;
    }

    profilingPstream::beginTiming();

    // On success: sets each request to MPI_REQUEST_NULL
    if (MPI_Waitall(count, waitRequests, MPI_STATUSES_IGNORE))
    {
        FatalErrorInFunction
            << "MPI_Waitall returned with error"
            << Foam::abort(FatalError);
    }

    profilingPstream::addWaitTime();
}
```

Definition at line 94 of file UPstreamRequest.C.

Referenced by processorGAMGInterfaceField::updateInterfaceMatrix(), and UPstream::Request::wait().

static

Wait until the specified request has finished. Corresponds to MPI_Wait().

A no-op if parRun() == false or for a null request.

Definition at line 95 of file UPstreamRequest.C.

static

Non-blocking comms: has request i finished? Corresponds to MPI_Test().

A no-op that returns true if parRun() == false, if there are no pending requests, or if the index is out of range (0 to nRequests).

Definition at line 97 of file UPstreamRequest.C.

Referenced by Foam::PstreamDetail::exchangeConsensus(), UPstream::Request::finished(), processorGAMGInterfaceField::ready(), and processorGAMGInterfaceField::updateInterfaceMatrix().

static

Non-blocking comms: has the request finished? Corresponds to MPI_Test().

A no-op that returns true if parRun() == false or for a null request.

Definition at line 98 of file UPstreamRequest.C.

static

Non-blocking comms: have all requests (from position onwards) finished? Corresponds to MPI_Testall().

A no-op that returns true if parRun() == false, if there are no pending requests, if the index is out of range (0 to nRequests), or if the addressed range is empty, etc.

| Parameter | Description |
|---|---|
| pos | starting position within the internal list of requests |
| len | length of slice to check (negative = until the end) |

Definition at line 100 of file UPstreamRequest.C.

Referenced by Foam::PstreamDetail::exchangeConsensus(), cyclicAMIGAMGInterfaceField::ready(), and cyclicACMIGAMGInterfaceField::ready().

static

Non-blocking comms: have all requests finished? Corresponds to MPI_Testall().

A no-op that returns true if parRun() == false or the list is empty.

Definition at line 106 of file UPstreamRequest.C.

static

Non-blocking comms: have both requests finished? Corresponds to a pair of MPI_Test() calls.

A no-op that returns true if parRun() == false, if there are no pending requests, or if the indices are out of range (0 to nRequests). Each finished request parameter is set to -1 (i.e. done).

Definition at line 112 of file UPstreamRequest.C.
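The convention of overwriting a finished request's index with -1 lets a caller poll two requests repeatedly without re-testing the ones already completed. A minimal sketch, assuming a hypothetical `testRequest` predicate in place of MPI_Test() and indices into a plain vector; treating an out-of-range index as already finished is also an assumption of this model. `finishedPairModel` is a hypothetical name.

```cpp
#include <cassert>
#include <functional>
#include <initializer_list>
#include <vector>

// Hypothetical model of a pair test: each index that refers to a finished
// request (or to nothing valid) is set to -1. Returns true once both are -1.
bool finishedPairModel
(
    int& req0,
    int& req1,
    const std::vector<int>& requests,
    const std::function<bool(int)>& testRequest
)
{
    const int n = static_cast<int>(requests.size());
    for (int* idx : {&req0, &req1})
    {
        if (*idx < 0 || *idx >= n || testRequest(requests[*idx]))
        {
            *idx = -1;   // done (or nothing to test)
        }
    }
    return req0 == -1 && req1 == -1;
}
```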

static

Non-blocking comms: wait for both requests to finish. Corresponds to a pair of MPI_Wait() calls.

A no-op if parRun() == false, if there are no pending requests, or if the indices are out of range (0 to nRequests). Each finished request parameter is set to -1 (i.e. done).

Definition at line 120 of file UPstreamRequest.C.

inline static noexcept

Test if this is a parallel run.

Modify access is deprecated.

Definition at line 1049 of file UPstream.H.

Referenced by snappyLayerDriver::addLayers(), unwatchedIOdictionary::addWatch(), regIOobject::addWatch(), masterUncollatedFileOperation::addWatch(), masterUncollatedFileOperation::addWatches(), meshRefinement::balance(), addPatchCellLayer::calcExtrudeInfo(), processorCyclicPolyPatch::calcGeometry(), processorPolyPatch::calcGeometry(), processorFaPatch::calcGeometry(), surfaceNoise::calculate(), cyclicAMIPolyPatch::canResetAMI(), designVariablesUpdate::checkConvergence(), faBoundaryMesh::checkParallelSync(), polyBoundaryMesh::checkParallelSync(), AMIInterpolation::checkSymmetricWeights(), fieldValue::combineFields(), limitTurbulenceViscosity::correct(), cellCellStencil::correctBoundaryConditions(), meshRefinement::countEdgeFaces(), processorFvPatch::coupled(), cyclicAMIPointPatch::coupled(), processorPointPatchField< Type >::coupled(), processorFvsPatchField< Type >::coupled(), processorCyclicFvsPatchField< Type >::coupled(), processorFaePatchField< Type >::coupled(), processorCyclicPointPatchField< Type >::coupled(), calculatedProcessorFvPatchField< Type >::coupled(), processorFaPatchField< Type >::coupled(), cyclicACMIFvPatch::coupled(), processorFvPatchField< Type >::coupled(), processorFaPatch::coupled(), cyclicAMIFvPatch::coupled(), processorPolyPatch::coupled(), simpleGeomDecomp::decompose(), decompositionMethod::decompose(), conformalVoronoiMesh::decomposition(), processorFvPatch::delta(), processorFaPatch::delta(), masterUncollatedFileOperation::dirPath(), refinementHistory::distribute(), fvMeshDistribute::distribute(), distributedTriSurfaceMesh::distribute(), distributedTriSurfaceMesh::distributedTriSurfaceMesh(), snappyLayerDriver::doLayers(), snappyRefineDriver::doRefine(), ensightSurfaceReader::ensightSurfaceReader(), Foam::exitNow(), faMeshReconstructor::faMeshReconstructor(), masterUncollatedFileOperation::filePath(), polyMesh::findCell(), masterUncollatedFileOperation::findInstance(), distributedTriSurfaceMesh::findLine(), 
distributedTriSurfaceMesh::findLineAll(), distributedTriSurfaceMesh::findLineAny(), distributedTriSurfaceMesh::findNearest(), masterUncollatedFileOperation::findTimes(), masterUncollatedFileOperation::findWatch(), ensightSurfaceReader::geometry(), Foam::getCommPattern(), zoneDistribute::getDatafromOtherProc(), distributedTriSurfaceMesh::getField(), masterUncollatedFileOperation::getFile(), distributedTriSurfaceMesh::getNormal(), distributedTriSurfaceMesh::getRegion(), masterUncollatedFileOperation::getState(), distributedTriSurfaceMesh::getVolumeType(), faMeshBoundaryHalo::haloSize(), InflationInjection< CloudType >::InflationInjection(), faMesh::init(), processorPolyPatch::initGeometry(), processorFaPatch::initGeometry(), InjectedParticleInjection< CloudType >::initialise(), InjectedParticleDistributionInjection< CloudType >::initialise(), extractEulerianParticles::initialiseBins(), processorPolyPatch::initOrder(), processorPolyPatch::initUpdateMesh(), processorFaPatch::initUpdateMesh(), fileOperation::isIOrank(), fileOperation::lookupAndCacheProcessorsPath(), LUscalarMatrix::LUscalarMatrix(), processorFaPatch::makeDeltaCoeffs(), processorFaPatch::makeNonGlobalPatchPoints(), processorFvPatch::makeWeights(), processorFaPatch::makeWeights(), error::master(), mergedSurf::merge(), surfaceWriter::merge(), surfaceWriter::mergeFieldTemplate(), polyBoundaryMesh::neighbourEdges(), masterUncollatedFileOperation::NewIFstream(), faMeshTools::newMesh(), fvMeshTools::newMesh(), fileWriter::open(), processorPolyPatch::order(), InflationInjection< CloudType >::parcelsToInject(), argList::parse(), meshRefinement::printMeshInfo(), processorTopology::procAdjacency(), collatedFileOperation::processorsDir(), surfaceNoise::read(), uncollatedFileOperation::read(), masterUncollatedFileOperation::read(), lumpedPointState::readData(), Time::readModifiedObjects(), masterUncollatedFileOperation::readObjects(), masterUncollatedFileOperation::readStream(), surfaceNoise::readSurfaceData(), 
masterUncollatedFileOperation::removeWatch(), parProfiling::report(), profilingPstream::report(), AMIWeights::reportPatch(), faMeshBoundaryHalo::reset(), fvMeshSubset::reset(), globalIndex::reset(), mapDistributeBase::schedule(), Time::setControls(), surfaceWriter::setSurface(), masterUncollatedFileOperation::setUnmodified(), zoneDistribute::setUpCommforZone(), globalMeshData::sharedPoints(), shortestPathSet::shortestPathSet(), error::simpleExit(), messageStream::stream(), surfaceWriter::surface(), surfaceNoise::surfaceAverage(), syncObjects::sync(), masterUncollatedFileOperation::sync(), syncTools::syncEdgeMap(), faMesh::syncGeom(), syncTools::syncPointMap(), triSurfaceMesh::triSurfaceMesh(), fileOperation::uniformFile(), turbulentDFSEMInletFvPatchVectorField::updateCoeffs(), processorPolyPatch::updateMesh(), processorFaPatch::updateMesh(), faMesh::updateMesh(), masterUncollatedFileOperation::updateStates(), fileOperation::updateStates(), dynamicCode::waitForFile(), energySpectrum::write(), ensightCells::write(), vtkWrite::write(), meshToMeshMethod::writeConnectivity(), AMIWeights::writeFileHeader(), fieldMinMax::writeFileHeader(), isoAdvection::writeIsoFaces(), faMeshReconstructor::writeMesh(), patchMeshWriter::writeNeighIDs(), collatedFileOperation::writeObject(), fileWriter::writeProcIDs(), surfaceNoise::writeSurfaceData(), and streamLineBase::writeToFile().

inline static noexcept

Have support for threads.

Definition at line 1054 of file UPstream.H.

Referenced by OFstreamCollator::write().

inline static noexcept

Relative rank for the master process; it is always 0.

Definition at line 1059 of file UPstream.H.

Referenced by UPstream::allocateCommunicator(), Pstream::broadcast(), Pstream::broadcastList(), Pstream::broadcasts(), Foam::createReconstructMap(), lduPrimitiveMesh::gather(), Foam::getHostGroupIds(), UPstream::is_subrank(), LUscalarMatrix::LUscalarMatrix(), UPstream::master(), masterUncollatedFileOperation::NewIFstream(), argList::parse(), uncollatedFileOperation::read(), masterUncollatedFileOperation::read(), decomposedBlockData::readBlocks(), masterUncollatedFileOperation::readHeader(), mapDistributeBase::schedule(), globalMeshData::sharedPoints(), syncTools::syncEdgeMap(), syncTools::syncPointMap(), energySpectrum::write(), decomposedBlockData::writeBlocks(), patchMeshWriter::writeNeighIDs(), patchMeshWriter::writePatchIDs(), and patchMeshWriter::writePoints().

inline static

Number of ranks in a parallel run (for the given communicator). It is 1 for a serial run.

Definition at line 1065 of file UPstream.H.

References UList< T >::size().

Referenced by surfaceZonesInfo::addCellZonesToMesh(), surfaceZonesInfo::addFaceZonesToMesh(), eagerGAMGProcAgglomeration::agglomerate(), manualGAMGProcAgglomeration::agglomerate(), procFacesGAMGProcAgglomeration::agglomerate(), masterCoarsestGAMGProcAgglomeration::agglomerate(), UPstream::allProcs(), meshRefinement::balance(), faPatch::boundaryProcs(), faMesh::boundaryProcs(), faPatch::boundaryProcSizes(), faMesh::boundaryProcSizes(), mapDistributeBase::calcCompactAddressing(), decomposedBlockData::calcNumProcs(), surfaceNoise::calculate(), meshRefinement::checkCoupledFaceZones(), mappedPatchBase::collectSamples(), fieldValue::combineFields(), sizeDistribution::combineFields(), GAMGAgglomeration::continueAgglomerating(), fvMeshDistribute::countCells(), Foam::createReconstructMap(), meshRefinement::directionalRefineCandidates(), fvMeshDistribute::distribute(), distributedTriSurfaceMesh::distribute(), snappyLayerDriver::doLayers(), mapDistributeBase::exchangeAddressing(), Foam::PstreamDetail::exchangeConsensus(), extendedUpwindCellToFaceStencil::extendedUpwindCellToFaceStencil(), distributedTriSurfaceMesh::findNearest(), mappedPatchBase::findSamples(), lduPrimitiveMesh::gather(), decomposedBlockData::gather(), externalCoupled::gatherAndCombine(), decomposedBlockData::gatherSlaveData(), fileOperation::getGlobalHostIORanks(), Foam::getHostGroupIds(), Foam::getSelectedProcs(), distributedTriSurfaceMesh::getVolumeType(), InjectedParticleInjection< CloudType >::initialise(), InjectedParticleDistributionInjection< CloudType >::initialise(), viewFactor::initialise(), UPstream::is_parallel(), UPstream::lastSlave(), UPstream::linearCommunication(), distributedTriSurfaceMesh::localQueries(), fileOperation::lookupAndCacheProcessorsPath(), LUscalarMatrix::LUscalarMatrix(), mapDistributeBase::mapDistributeBase(), masterUncollatedFileOperation::masterUncollatedFileOperation(), masterUncollatedFileOperation::NewIFstream(), InflationInjection< CloudType >::parcelsToInject(), 
argList::parse(), pointHistory::pointHistory(), mapDistributeBase::printLayout(), meshRefinement::printMeshInfo(), fileOperation::printRanks(), processorTopology::procAdjacency(), triangulatedPatch::randomGlobalPoint(), masterUncollatedFileOperation::readHeader(), masterUncollatedFileOperation::readStream(), meshRefinement::refineCandidates(), Foam::regionSum(), meshRefinement::removeGapCells(), parProfiling::report(), profilingPstream::report(), faMeshBoundaryHalo::reset(), fvMeshSubset::reset(), globalIndex::reset(), mapDistributeBase::schedule(), Time::setControls(), zoneDistribute::setUpCommforZone(), ParSortableList< Type >::sort(), UPstream::subProcs(), fileOperation::subRanks(), UPstream::treeCommunication(), trackingInverseDistance::update(), inverseDistance::update(), oversetFvMeshBase::updateAddressing(), turbulentDFSEMInletFvPatchVectorField::updateCoeffs(), globalMeshData::updateMesh(), UPstream::whichCommunication(), decomposedBlockData::writeBlocks(), externalCoupled::writeGeometry(), isoAdvection::writeIsoFaces(), caseInfo::writeMeta(), and streamLineBase::writeToFile().

inline static

Rank of this process in the communicator (starting from masterNo()). Can be negative if the process is not a rank in the communicator.

Definition at line 1074 of file UPstream.H.

Referenced by surfaceZonesInfo::addCellZonesToMesh(), surfaceZonesInfo::addFaceZonesToMesh(), eagerGAMGProcAgglomeration::agglomerate(), manualGAMGProcAgglomeration::agglomerate(), masterCoarsestGAMGProcAgglomeration::agglomerate(), UPstream::allocateIntraHostCommunicator(), faPatch::boundaryProcs(), faMesh::boundaryProcs(), faPatch::boundaryProcSizes(), faMesh::boundaryProcSizes(), mapDistributeBase::calcCompactAddressing(), globalIndex::calcOffset(), globalIndex::calcRange(), faceAreaWeightAMI::calculate(), viewFactor::calculate(), GAMGAgglomeration::calculateRegionMaster(), meshRefinement::checkCoupledFaceZones(), mappedPatchBase::collectSamples(), fieldValue::combineFields(), sizeDistribution::combineFields(), wallDistAddressing::correct(), Foam::createReconstructMap(), noDecomp::decompose(), fvMeshDistribute::distribute(), distributedTriSurfaceMesh::distribute(), mapDistributeBase::exchangeAddressing(), Foam::PstreamDetail::exchangeBuf(), Foam::PstreamDetail::exchangeChunkedBuf(), Foam::PstreamDetail::exchangeConsensus(), Foam::PstreamDetail::exchangeContainer(), processorField::execute(), InjectionModel< CloudType >::findCellAtPosition(), patchProbes::findElements(), probes::findElements(), mappedPatchBase::findLocalSamples(), distributedTriSurfaceMesh::findNearest(), mappedPatchBase::findSamples(), globalIndex::gather(), externalCoupled::gatherAndCombine(), decomposedBlockData::gatherSlaveData(), zoneDistribute::getDatafromOtherProc(), Foam::getSelectedProcs(), InjectedParticleInjection< CloudType >::initialise(), InjectedParticleDistributionInjection< CloudType >::initialise(), viewFactor::initialise(), globalIndex::inplaceToGlobal(), globalIndex::isLocal(), lduPrimitiveMesh::lduPrimitiveMesh(), globalIndex::localEnd(), globalIndex::localSize(), globalIndex::localStart(), mapDistribute::mapDistribute(), mapDistributeBase::mapDistributeBase(), masterUncollatedFileOperation::masterUncollatedFileOperation(), PstreamBuffers::maxNonLocalRecvCount(), 
globalIndex::maxNonLocalSize(), processorColour::myColour(), UPstream::myWorld(), UPstream::myWorldID(), masterUncollatedFileOperation::NewIFstream(), regionSplit::nLocalRegions(), InflationInjection< CloudType >::parcelsToInject(), argList::parse(), pointHistory::pointHistory(), mapDistributeBase::printLayout(), fileOperation::printRanks(), processorTopology::procAdjacency(), backgroundMeshDecomposition::procBounds(), triangulatedPatch::randomGlobalPoint(), globalIndex::range(), masterUncollatedFileOperation::read(), masterUncollatedFileOperation::readHeader(), masterUncollatedFileOperation::readStream(), indexedVertex< Gt, Vb >::referred(), mapDistributeBase::renumber(), faMeshBoundaryHalo::reset(), fvMeshSubset::reset(), globalIndex::reset(), mapDistributeBase::schedule(), cellSetOption::setCellSelection(), Time::setControls(), patchInjectionBase::setPositionAndCell(), zoneDistribute::setUpCommforZone(), ParSortableList< Type >::sort(), KinematicSurfaceFilm< CloudType >::splashInteraction(), fileOperation::subRanks(), masterUncollatedFileOperation::sync(), globalIndex::toGlobal(), globalIndex::toLocal(), trackingInverseDistance::update(), inverseDistance::update(), oversetFvMeshBase::updateAddressing(), patchInjectionBase::updateMesh(), processorField::updateMesh(), propellerInfo::updateSampleDiskCells(), dynamicCode::waitForFile(), globalIndex::whichProcID(), meshToMeshMethod::writeConnectivity(), AMIInterpolation::writeFaceConnectivity(), externalCoupled::writeGeometry(), isoAdvection::writeIsoFaces(), and fileWriter::writeProcIDs().

inline static

True if process corresponds to the master rank in the communicator.

Definition at line 1082 of file UPstream.H.

References UPstream::masterNo().

Referenced by abaqusMeshSet::abaqusMeshSet(), regIOobject::addWatch(), masterUncollatedFileOperation::addWatch(), masterUncollatedFileOperation::addWatches(), Pstream::broadcast(), Foam::broadcastFile_recursive(), Foam::broadcastFile_single(), Pstream::broadcastList(), Pstream::broadcasts(), mappedPatchBase::calcMapping(), decomposedBlockData::calcNumProcs(), pointNoise::calculate(), viewFactor::calculate(), surfaceNoise::calculate(), writeFile::canResetFile(), writeFile::canWriteHeader(), writeFile::canWriteToFile(), argList::check(), fileWriter::checkFormatterValidity(), argList::checkRootCase(), extractEulerianParticles::collectParticle(), sizeDistribution::combineFields(), logFiles::createFiles(), Foam::createReconstructMap(), simpleGeomDecomp::decompose(), masterUncollatedFileOperation::dirPath(), systemCall::dispatch(), distributedTriSurfaceMesh::distribute(), distributedTriSurfaceMesh::distributedTriSurfaceMesh(), snappyVoxelMeshDriver::doRefine(), abort::end(), ensightSurfaceReader::ensightSurfaceReader(), abort::execute(), wallHeatFlux::execute(), Curle::execute(), momentum::execute(), externalFileCoupler::externalFileCoupler(), masterUncollatedFileOperation::filePath(), logFiles::files(), probes::findElements(), masterUncollatedFileOperation::findInstance(), masterUncollatedFileOperation::findTimes(), masterUncollatedFileOperation::findWatch(), STDMD::fit(), lduPrimitiveMesh::gather(), decomposedBlockData::gather(), externalCoupled::gatherAndCombine(), decomposedBlockData::gatherSlaveData(), coordSet::gatherSort(), ensightSurfaceReader::geometry(), masterUncollatedFileOperation::getFile(), fileOperation::getGlobalHostIORanks(), Foam::getHostGroupIds(), Foam::getSelectedProcs(), masterUncollatedFileOperation::getState(), Random::globalGaussNormal(), Random::globalPosition(), Random::globalRandomise01(), Random::globalSample01(), surfaceNoise::initialise(), viewFactor::initialise(), fileOperation::isIOrank(), JobInfo::JobInfo(), 
fileOperation::lookupAndCacheProcessorsPath(), LUscalarMatrix::LUscalarMatrix(), NURBS3DVolume::makeFolders(), error::master(), messageStream::masterStream(), surfaceWriter::mergeFieldTemplate(), ensightCase::newCloud(), writeFile::newFile(), writeFile::newFileAtTime(), ensightCase::newGeometry(), masterUncollatedFileOperation::NewIFstream(), faMeshTools::newMesh(), fvMeshTools::newMesh(), fileOperation::nProcs(), objectiveManager::objectiveManager(), fileWriter::open(), InflationInjection< CloudType >::parcelsToInject(), argList::parse(), pointHistory::pointHistory(), porosityModel::porosityModel(), probes::prepare(), UPstream::printCommTree(), meshRefinement::printMeshInfo(), fileOperation::printRanks(), pointNoise::processData(), timeActivatedFileUpdate::read(), decomposedBlockData::read(), writeFile::read(), uncollatedFileOperation::read(), externalCoupled::read(), sampledSets::read(), sampledSurfaces::read(), masterUncollatedFileOperation::read(), decomposedBlockData::readBlocks(), baseIOdictionary::readData(), lumpedPointState::readData(), masterUncollatedFileOperation::readHeader(), masterUncollatedFileOperation::readObjects(), masterUncollatedFileOperation::readStream(), surfaceNoise::readSurfaceData(), externalCoupled::removeDataMaster(), externalCoupled::removeDataSlave(), masterUncollatedFileOperation::removeWatch(), profilingPstream::report(), globalIndex::reset(), logFiles::resetNames(), mapDistributeBase::schedule(), faMatrix< Type >::setReference(), ensightCase::setTime(), masterUncollatedFileOperation::setUnmodified(), globalMeshData::sharedPoints(), shortestPathSet::shortestPathSet(), externalFileCoupler::shutdown(), snappyVoxelMeshDriver::snappyVoxelMeshDriver(), rigidBodyMeshMotionSolver::solve(), rigidBodyMeshMotion::solve(), rigidBodyMotion::solve(), ParSortableList< Type >::sort(), messageStream::stream(), surfaceNoise::surfaceAverage(), hexRef8Data::sync(), masterUncollatedFileOperation::sync(), syncTools::syncEdgeMap(), 
syncTools::syncPointMap(), triSurfaceMesh::triSurfaceMesh(), sixDoFRigidBodyMotion::update(), lumpedPointDisplacementPointPatchVectorField::updateCoeffs(), activePressureForceBaffleVelocityFvPatchVectorField::updateCoeffs(), electrostaticDepositionFvPatchScalarField::updateCoeffs(), fileMonitor::updateStates(), masterUncollatedFileOperation::updateStates(), solution::upgradeSolverDict(), externalFileCoupler::useMaster(), externalFileCoupler::useSlave(), OFstreamCollator::waitAll(), externalFileCoupler::waitForMaster(), externalFileCoupler::waitForSlave(), histogramModel::write(), proxyWriter::write(), timeInfo::write(), debugWriter::write(), energySpectrum::write(), x3dWriter::write(), starcdWriter::write(), OFstreamCollator::write(), foamWriter::write(), rawWriter::write(), yPlus::write(), referenceTemperature::write(), wallShearStress::write(), abaqusWriter::write(), vtkWriter::write(), boundaryDataWriter::write(), nastranWriter::write(), caseInfo::write(), vtkCloud::write(), sizeDistribution::write(), NURBS3DCurve::write(), effectivenessTable::write(), volFieldValue::write(), vtkWrite::write(), regionSizeDistribution::write(), objective::write(), NURBS3DSurface::write(), surfaceFieldValue::write(), propellerInfo::writeAxialWake(), decomposedBlockData::writeBlocks(), volumetricBSplinesDesignVariables::writeBounds(), ensightWriter::writeCollated(), updateMethod::writeCorrection(), NURBS3DVolume::writeCps(), decomposedBlockData::writeData(), lumpedPointMovement::writeData(), lumpedPointMovement::writeForcesAndMomentsVTP(), externalCoupled::writeGeometry(), objective::writeInstantaneousSeparator(), objective::writeInstantaneousValue(), isoAdvection::writeIsoFaces(), objective::writeMeanValue(), SQPBase::writeMeritFunction(), faMeshReconstructor::writeMesh(), patchMeshWriter::writeNeighIDs(), collatedFileOperation::writeObject(), decomposedBlockData::writeObject(), patchMeshWriter::writePatchIDs(), patchMeshWriter::writePoints(), fileWriter::writeProcIDs(), 
surfaceNoise::writeSurfaceData(), foamWriter::writeTemplate(), starcdWriter::writeTemplate(), boundaryDataWriter::writeTemplate(), x3dWriter::writeTemplate(), debugWriter::writeTemplate(), vtkWriter::writeTemplate(), streamLineBase::writeToFile(), GCMMA::writeToFiles(), ensightWriter::writeUncollated(), NURBS3DSurface::writeVTK(), propellerInfo::writeWake(), AMIWeights::writeWeightField(), NURBS3DCurve::writeWParses(), and NURBS3DSurface::writeWParses().

inline static

True if process corresponds to any rank (master or sub-rank) in the given communicator.

Definition at line 1091 of file UPstream.H.

Referenced by Foam::PstreamDetail::exchangeConsensus(), and UPstream::is_parallel().

inline static

True if process corresponds to a sub-rank in the given communicator.

Definition at line 1099 of file UPstream.H.

References UPstream::masterNo().

Referenced by Foam::broadcastFile_recursive(), Foam::broadcastFile_single(), and Pstream::broadcastList().

inline static

True if a parallel algorithm or exchange is required.

This is the case when parRun() == true, the process corresponds to a rank in the communicator, and there is more than one rank in the communicator.

Definition at line 1111 of file UPstream.H.

References UPstream::is_rank(), and UPstream::nProcs().

Referenced by Pstream::broadcast(), Pstream::broadcastList(), Pstream::broadcasts(), globalIndex::calcOffset(), globalIndex::calcRange(), IOobjectList::checkNames(), Foam::PstreamDetail::exchangeConsensus(), Foam::reduce(), and Foam::sumReduce().
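The rank predicates described above can be restated as a few one-line functions of parRun(), myProcNo(), nProcs() and masterNo(). This is a standalone sketch, not the UPstream.H code: the communicator state is passed in explicitly through a hypothetical `CommState` struct, and the rules "a non-negative rank means membership" and "a sub-rank is any rank above the master" are inferred from the descriptions of myProcNo(), is_rank() and is_subrank().

```cpp
#include <cassert>

// Hypothetical snapshot of what UPstream would report for one communicator.
struct CommState
{
    bool parRun;    // parallel run?
    int myProcNo;   // rank in the communicator, negative if not a member
    int nProcs;     // number of ranks in the communicator
};

constexpr int masterNo() { return 0; }   // always 0, per the documentation

bool isMaster(const CommState& s) { return s.myProcNo == masterNo(); }
bool isRank(const CommState& s) { return s.myProcNo >= 0; }
bool isSubrank(const CommState& s) { return s.myProcNo > masterNo(); }

// Parallel work is only needed for a member of a multi-rank communicator.
bool isParallel(const CommState& s)
{
    return s.parRun && isRank(s) && s.nProcs > 1;
}
```

A serial run (`parRun == false`, rank 0 of 1) is therefore the master and a rank, but never "parallel", which is why collective helpers such as broadcast and reduce can return early in that case.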

inline static

The parent communicator.

Definition at line 1122 of file UPstream.H.

Referenced by UPstream::baseProcNo(), UPstream::procNo(), and UPstream::communicator::reset().

inline static

The list of ranks within a given communicator.

Definition at line 1130 of file UPstream.H.

Referenced by UPstream::baseProcNo(), Foam::operator<<(), UList< Foam::vector >::operator[](), collatedFileOperation::processorsDir(), UPstream::procNo(), and UPstream::commsStruct::reset().

inline static noexcept

All worlds.

Definition at line 1141 of file UPstream.H.

Referenced by mappedPatchBase::calcMapping(), mappedPatchBase::masterWorld(), argList::parse(), Foam::printDOT(), and mappedPatchBase::sameWorld().

inline static noexcept

The indices into allWorlds for all processes.

Definition at line 1149 of file UPstream.H.

inline static

My worldID.

Definition at line 1157 of file UPstream.H.

References UPstream::commGlobal(), and UPstream::myProcNo().

Referenced by mappedPatchBase::calcMapping(), multiWorldConnections::createComms(), and mappedPatchBase::masterWorld().

inline static

My world.

Definition at line 1165 of file UPstream.H.

References UPstream::commGlobal(), and UPstream::myProcNo().

Referenced by multiWorldConnections::addConnectionById(), multiWorldConnections::addConnectionByName(), mappedPatchBase::calcMapping(), argList::parse(), mappedPatchBase::sameWorld(), and mappedPatchBase::sampleMesh().
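Since both world accessors reference commGlobal() and myProcNo(), a plausible reading is that the global rank indexes the per-process world indices, and that index then selects an entry of allWorlds. The sketch below models that lookup with a hypothetical Worlds struct; the world names are made up for illustration.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical multi-world layout:
//   allWorlds          names of all worlds
//   worldIDs[proci]    index into allWorlds for global rank proci
struct Worlds
{
    std::vector<std::string> allWorlds;
    std::vector<int> worldIDs;
};

// World index of the process with the given global rank
inline int myWorldID(const Worlds& w, int globalRank)
{
    return w.worldIDs[globalRank];
}

// World name of the process with the given global rank
inline const std::string& myWorld(const Worlds& w, int globalRank)
{
    return w.allWorlds[myWorldID(w, globalRank)];
}
```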

Range of process indices for all processes.

Definition at line 1176 of file UPstream.H.

References UPstream::nProcs().

Referenced by refinementHistory::distribute(), Foam::getSelectedProcs(), viewFactor::initialise(), ParSortableList< Type >::sort(), energySpectrum::write(), and externalCoupled::writeGeometry().

Range of process indices for sub-processes.

Definition at line 1185 of file UPstream.H.

References UPstream::nProcs().

Referenced by lduPrimitiveMesh::gather(), LUscalarMatrix::LUscalarMatrix(), masterUncollatedFileOperation::NewIFstream(), argList::parse(), decomposedBlockData::readBlocks(), mapDistributeBase::schedule(), globalMeshData::sharedPoints(), syncTools::syncEdgeMap(), syncTools::syncPointMap(), patchMeshWriter::writeNeighIDs(), patchMeshWriter::writePatchIDs(), and patchMeshWriter::writePoints().
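Both ranges are contiguous: all processes cover ranks 0 to nProcs-1, and the sub-processes skip the master (rank 0). The sketch below uses a tiny hypothetical IntRange with a start and a size, not Foam::IntRange itself, to show which ranks each range yields.

```cpp
#include <cassert>
#include <vector>

// Hypothetical half-open integer range [start, start + size)
struct IntRange
{
    int start;
    int size;

    // Expand the range into its individual values
    std::vector<int> values() const
    {
        std::vector<int> v;
        for (int i = start; i < start + size; ++i)
        {
            v.push_back(i);
        }
        return v;
    }
};

// All process indices: 0 .. nProcs-1
inline IntRange allProcs(int nProcs) { return IntRange{0, nProcs}; }

// Sub-process indices: 1 .. nProcs-1 (the master, rank 0, is excluded)
inline IntRange subProcs(int nProcs) { return IntRange{1, nProcs - 1}; }
```

With a single process, the sub-process range is empty, so a loop over it on the master does nothing.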

static

Communication schedule for linear all-to-master (proc 0).

Definition at line 646 of file UPstream.C.

References UPstream::nProcs().

Referenced by UPstream::whichCommunication().
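In a linear schedule every sub-rank communicates directly with the master, so the master handles nProcs-1 partners one after another. The sketch below uses a hypothetical CommsEntry (rank above, ranks below) as a simplified stand-in for UPstream::commsStruct, which may hold more information.

```cpp
#include <cassert>
#include <vector>

// Hypothetical simplified schedule entry
struct CommsEntry
{
    int above = -1;          // rank towards the master (-1 for the master)
    std::vector<int> below;  // ranks that report directly to this one
};

// Linear all-to-master schedule: every sub-rank talks directly to rank 0
inline std::vector<CommsEntry> linearSchedule(int nProcs)
{
    std::vector<CommsEntry> sched(nProcs > 0 ? nProcs : 0);
    for (int proci = 1; proci < nProcs; ++proci)
    {
        sched[0].below.push_back(proci);
        sched[proci].above = 0;
    }
    return sched;
}
```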

static

Communication schedule for tree all-to-master (proc 0).

Definition at line 659 of file UPstream.C.

References UPstream::nProcs().

Referenced by UPstream::whichCommunication().
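A tree schedule spreads the work so that each rank only exchanges with its parent and its direct children, giving a depth of about log2(nProcs). The sketch below builds a binomial tree rooted at rank 0. This is a common textbook tree and is an assumption here: OpenFOAM's own tree construction may order or group ranks differently. CommsEntry is a hypothetical simplified stand-in for UPstream::commsStruct.

```cpp
#include <cassert>
#include <vector>

// Hypothetical simplified schedule entry
struct CommsEntry
{
    int above = -1;          // parent rank (-1 for the master)
    std::vector<int> below;  // direct children
};

// Binomial tree rooted at rank 0: the parent of rank r is r with its
// lowest set bit cleared, so any rank reaches the master in at most
// ceil(log2(nProcs)) hops
inline std::vector<CommsEntry> treeSchedule(int nProcs)
{
    std::vector<CommsEntry> sched(nProcs > 0 ? nProcs : 0);
    for (int r = 1; r < nProcs; ++r)
    {
        const int parent = r & (r - 1);  // clear the lowest set bit
        sched[r].above = parent;
        sched[parent].below.push_back(r);
    }
    return sched;
}
```

For 8 ranks the master talks to ranks 1, 2 and 4 only, instead of all 7 ranks as in a linear schedule.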

inline static

Communication schedule for linear/tree all-to-master (proc 0). Chooses based on the value of UPstream::nProcsSimpleSum.

Definition at line 1213 of file UPstream.H.

References UPstream::linearCommunication(), UPstream::nProcs(), UPstream::nProcsSimpleSum, and UPstream::treeCommunication().

Referenced by UPstream::printCommTree(), Foam::reduce(), and Pstream::scatter().
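The selection can be sketched as a single threshold test. The exact rule is an assumption here: the sketch picks the linear schedule when the number of ranks is below nProcsSimpleSum and the tree schedule otherwise, so a threshold of 0 always selects the tree. Schedule and whichSchedule are hypothetical names.

```cpp
#include <cassert>

enum class Schedule { linear, tree };

// Assumed rule: linear schedule below the nProcsSimpleSum threshold,
// tree schedule otherwise
inline Schedule whichSchedule(int nProcs, int nProcsSimpleSum)
{
    return (nProcs < nProcsSimpleSum) ? Schedule::linear : Schedule::tree;
}
```

The design trade-off is that small runs avoid the extra hops of the tree, while large runs avoid serialising all traffic through the master.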

inline static noexcept

Message tag of standard messages.

Definition at line 1229 of file UPstream.H.

Referenced by solidAbsorption::a(), mappedPatchBase::calcMapping(), faceAreaWeightAMI::calculate(), AMIInterpolation::calculate(), advancingFrontAMI::checkPatches(), masterUncollatedFileOperation::chMod(), mappedPatchBase::collectSamples(), extendedCentredCellToFaceStencil::compact(), extendedCentredFaceToCellStencil::compact(), extendedCentredCellToCellStencil::compact(), GAMGAgglomeration::continueAgglomerating(), masterUncollatedFileOperation::cp(), masterUncollatedFileOperation::dirPath(), mappedPatchBase::distribute(), solidAbsorption::e(), Foam::PstreamDetail::exchangeChunkedBuf(), masterUncollatedFileOperation::exists(), masterUncollatedFileOperation::filePath(), masterUncollatedFileOperation::fileSize(), distributedTriSurfaceMesh::findNearest(), mappedPatchBase::findSamples(), lduPrimitiveMesh::gather(), Foam::gAverage(), distributedTriSurfaceMesh::getVolumeType(), Foam::gSumCmptProd(), Foam::gSumProd(), masterUncollatedFileOperation::highResLastModified(), viewFactor::initialise(), regionModel::interRegionAMI(), masterUncollatedFileOperation::isDir(), masterUncollatedFileOperation::isFile(), masterUncollatedFileOperation::lastModified(), lduPrimitiveMesh::lduPrimitiveMesh(), masterUncollatedFileOperation::ln(), LUscalarMatrix::LUscalarMatrix(), surfaceWriter::mergeFieldTemplate(), masterUncollatedFileOperation::mkDir(), masterUncollatedFileOperation::mode(), masterUncollatedFileOperation::mv(), masterUncollatedFileOperation::mvBak(), masterUncollatedFileOperation::NewIFstream(), fileOperation::printRanks(), processorTopology::procAdjacency(), decomposedBlockData::readBlocks(), masterUncollatedFileOperation::readDir(), masterUncollatedFileOperation::readHeader(), masterUncollatedFileOperation::readStream(), surfaceNoise::readSurfaceData(), fvMatrix< Type >::relax(), faMeshBoundaryHalo::reset(), mappedPatchBase::reverseDistribute(), masterUncollatedFileOperation::rm(), masterUncollatedFileOperation::rmDir(), mapDistributeBase::schedule(), 
surfaceNoise::surfaceAverage(), processorFvPatch::tag(), processorFaPatch::tag(), processorCyclicPolyPatch::tag(), processorPolyPatch::tag(), masterUncollatedFileOperation::type(), oversetFvMeshBase::updateAddressing(), wideBandDiffusiveRadiationMixedFvPatchScalarField::updateCoeffs(), mappedVelocityFluxFixedValueFvPatchField::updateCoeffs(), mappedFlowRateFvPatchVectorField::updateCoeffs(), MarshakRadiationFvPatchScalarField::updateCoeffs(), filmPyrolysisTemperatureCoupledFvPatchScalarField::updateCoeffs(), filmPyrolysisVelocityCoupledFvPatchVectorField::updateCoeffs(), MarshakRadiationFixedTemperatureFvPatchScalarField::updateCoeffs(), greyDiffusiveRadiationMixedFvPatchScalarField::updateCoeffs(), alphatFilmWallFunctionFvPatchScalarField::updateCoeffs(), thermalBaffle1DFvPatchScalarField< solidType >::updateCoeffs(), turbulentTemperatureCoupledBaffleMixedFvPatchScalarField::updateCoeffs(), turbulentTemperatureRadCoupledMixedFvPatchScalarField::updateCoeffs(), globalMeshData::updateMesh(), OFstreamCollator::write(), decomposedBlockData::writeBlocks(), and surfaceNoise::writeSurfaceData().

inline static noexcept

Set the message tag for standard messages.

Definition at line 1239 of file UPstream.H.

inline static noexcept

Increment the message tag for standard messages.

Definition at line 1251 of file UPstream.H.

Referenced by solidAbsorption::a(), solidAbsorption::e(), regionModel::interRegionAMI(), wideBandDiffusiveRadiationMixedFvPatchScalarField::updateCoeffs(), mappedVelocityFluxFixedValueFvPatchField::updateCoeffs(), mappedFlowRateFvPatchVectorField::updateCoeffs(), MarshakRadiationFvPatchScalarField::updateCoeffs(), filmPyrolysisTemperatureCoupledFvPatchScalarField::updateCoeffs(), filmPyrolysisVelocityCoupledFvPatchVectorField::updateCoeffs(), MarshakRadiationFixedTemperatureFvPatchScalarField::updateCoeffs(), greyDiffusiveRadiationMixedFvPatchScalarField::updateCoeffs(), alphatFilmWallFunctionFvPatchScalarField::updateCoeffs(), thermalBaffle1DFvPatchScalarField< solidType >::updateCoeffs(), turbulentTemperatureCoupledBaffleMixedFvPatchScalarField::updateCoeffs(), and turbulentTemperatureRadCoupledMixedFvPatchScalarField::updateCoeffs().
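The tag accessors above can be sketched as a static counter. This assumes, as is common for such setters, that both the setter and the increment return the previous tag so that a caller can restore it afterwards; the class name MsgTag and the starting value are hypothetical.

```cpp
#include <cassert>

// Hypothetical stand-in for a global tag used by standard messages
class MsgTag
{
    static inline int tag_ = 1;  // illustrative starting value

public:
    // Current tag for standard messages
    static int msgType() noexcept { return tag_; }

    // Set a new tag, returning the previous one (assumed convention)
    static int msgType(int val) noexcept
    {
        const int old = tag_;
        tag_ = val;
        return old;
    }

    // Increment the tag, returning the previous one (assumed convention)
    static int incrMsgType(int val = 1) noexcept
    {
        const int old = tag_;
        tag_ += val;
        return old;
    }
};
```

In this sketch a caller can keep the value returned by incrMsgType(), run an exchange whose messages must not match other standard messages, and then pass the saved value back to msgType(int) to restore the tag.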

inline noexcept

Get the communications type of the stream.

Definition at line 1261 of file UPstream.H.

Referenced by UIPstream::read(), UIPstream::UIPstream(), and UOPstream::write().

inline noexcept

Set the communications type of the stream.

Definition at line 1271 of file UPstream.H.
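A stream that stores its communications type can expose the getter and setter as an overload pair. The sketch below reuses the commsTypes enumerators from the class summary (written as a scoped enum) and assumes that the setter returns the previous type; Stream is a hypothetical class, not UPstream.

```cpp
#include <cassert>

// Enumerators as listed in the class summary (scoped here)
enum class commsTypes : char { blocking, scheduled, nonBlocking };

// Hypothetical stream that remembers its communications type
class Stream
{
    commsTypes commsType_;

public:
    explicit Stream(commsTypes ct) noexcept : commsType_(ct) {}

    // Get the communications type of the stream
    commsTypes commsType() const noexcept { return commsType_; }

    // Set the communications type, returning the previous value (assumed)
    commsTypes commsType(commsTypes ct) noexcept
    {
        const commsTypes old = commsType_;
        commsType_ = ct;
        return old;
    }
};
```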

static

Shutdown (finalize) MPI as required.

Uses MPI_Abort instead of MPI_Finalize if errNo is non-zero.

Definition at line 51 of file UPstream.C.

Referenced by ParRunControl::~ParRunControl().

static

Call MPI_Abort with no other checks or cleanup.

Definition at line 62 of file UPstream.C.

References Foam::abort().

Referenced by error::simpleExit().

static

Shutdown (finalize) MPI as required and exit program with errNo.

Definition at line 55 of file UPstream.C.

References Foam::exit().

Referenced by argList::argList(), designVariablesUpdate::checkConvergence(), Foam::exitNow(), argList::parse(), ParRunControl::runPar(), and error::simpleExit().
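The choice between MPI_Abort and MPI_Finalize described for the shutdown routine can be isolated in a small decision function. No MPI calls are made here: ShutdownAction and the mpiActive flag (standing in for "as required", i.e. MPI was actually started) are hypothetical.

```cpp
#include <cassert>

enum class ShutdownAction { none, finalize, abort };

// Decide how MPI would be shut down (illustrative only, no MPI calls):
// nothing to do if MPI is not active, otherwise a non-zero errNo
// selects MPI_Abort and zero selects MPI_Finalize
inline ShutdownAction shutdownAction(bool mpiActive, int errNo)
{
    if (!mpiActive)
    {
        return ShutdownAction::none;
    }
    return (errNo != 0) ? ShutdownAction::abort : ShutdownAction::finalize;
}
```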

static

Exchange int32_t data with all ranks in communicator.

Non-parallel: sendData is simply copied to recvData.

| [in] | sendData | The value at [proci] is sent to proci |

| [out] | recvData | The data received from the other ranks |

Definition at line 42 of file UPstreamAllToAll.C.

Referenced by fvMeshDistribute::distribute().
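An all-to-all exchange is a transpose: the value that rank src places at index dst ends up on rank dst at index src. The single-process model below shows only that data movement (no MPI); allToAllModel is a hypothetical name, and with a single rank it reduces to the plain copy described for the non-parallel case.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Matrix = std::vector<std::vector<std::int32_t>>;

// Single-process model of an all-to-all exchange (no MPI):
// send[src][dst] is what rank src sends to rank dst, and the result
// recv[dst][src] is what rank dst receives from rank src
inline Matrix allToAllModel(const Matrix& send)
{
    const std::size_t n = send.size();
    Matrix recv(n, std::vector<std::int32_t>(n, 0));
    for (std::size_t src = 0; src < n; ++src)
    {
        for (std::size_t dst = 0; dst < n; ++dst)
        {
            recv[dst][src] = send[src][dst];
        }
    }
    return recv;
}
```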

static

Exchange non-zero int32_t data between ranks [NBX].

recvData is always initialised to zero, and only non-zero values are sent to or received from other ranks.

Non-parallel: sendData is simply copied to recvData.

Note: the message tag should be chosen to be a unique value, since the implementation uses probing with ANY_SOURCE.

An initial barrier may help to avoid synchronisation problems caused elsewhere (see the "nbx.tuning" optimisation switch).

| [in] | sendData | The non-zero value at [proci] is sent to proci |

| [out] | recvData | The non-zero value received from each rank |

| tag | Message tag for the communication |

Definition at line 74 of file UPstreamAllToAll.C.
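In the NBX (non-blocking consensus) pattern, each rank posts synchronous sends for its non-zero entries, probes for incoming messages from any source, and uses a non-blocking barrier to detect when all sends have been matched. The model below does not implement that protocol; it only reproduces the result semantics stated above (receive buffer zeroed first, zero values never transmitted) and counts how many messages would travel between different ranks. sparseExchangeModel is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Matrix = std::vector<std::vector<std::int32_t>>;

// Single-process model of the non-zero exchange semantics (no MPI, no
// NBX protocol): recv starts zeroed, zero entries are never "sent", and
// 'messages' counts transfers between different ranks
inline Matrix sparseExchangeModel(const Matrix& send, int& messages)
{
    const std::size_t n = send.size();
    Matrix recv(n, std::vector<std::int32_t>(n, 0));
    messages = 0;
    for (std::size_t src = 0; src < n; ++src)
    {
        for (std::size_t dst = 0; dst < n; ++dst)
        {
            if (send[src][dst] != 0)
            {
                recv[dst][src] = send[src][dst];
                if (src != dst)
                {
                    ++messages;
                }
            }
        }
    }
    return recv;
}
```

For sparse connectivity (each rank talking to a few neighbours), the number of messages scales with the number of non-zero entries rather than with nProcs squared.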

static

Exchange int32_t data between ranks [NBX].

The recvData map is always cleared initially, so a simple check of its keys is sufficient to determine connectivity.

Non-parallel: only the entry for the own rank (if it exists) is copied.

See the notes about message tags and the "nbx.tuning" optimisation switch.

| [in] | sendData | The value at [proci] is sent to proci. |

| [out] | recvData | The values received from given ranks. |

| tag | Message tag for the communication |

Definition at line 74 of file UPstreamAllToAll.C.
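With a map per rank, only the ranks that actually exchange something appear as keys, which is why a key check reveals connectivity. The single-process model below (hypothetical mapExchangeModel, no MPI) stores, for each destination, the received values keyed by the sending rank; with one rank it simply copies that rank's own entry, if present.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <vector>

// Single-process model of the map-based exchange (no MPI):
// sendData[src] maps destination rank -> value. Each recvData[dst]
// starts empty, so its keys are exactly the ranks that sent to dst.
inline std::vector<std::map<int, std::int32_t>>
mapExchangeModel(const std::vector<std::map<int, std::int32_t>>& sendData)
{
    std::vector<std::map<int, std::int32_t>> recvData(sendData.size());
    for (std::size_t src = 0; src < sendData.size(); ++src)
    {
        for (const auto& [dst, value] : sendData[src])
        {
            recvData.at(dst)[static_cast<int>(src)] = value;
        }
    }
    return recvData;
}
```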

inline static

Exchange int32_t data between ranks [NBX].

| [in] | sendData | The value at [proci] is sent to proci. |

| tag | Message tag for the communication |

Definition at line 1362 of file UPstream.H.

static

Exchange int64_t data with all ranks in communicator.

non-parallel : simple copy of sendData to recvData

| [in] | sendData | The value at [proci] is sent to proci |

| [out] | recvData | The data received from the other ranks |

Definition at line 43 of file UPstreamAllToAll.C.

static

Exchange non-zero int64_t data between ranks [NBX].

recvData is always initialised to zero, and only non-zero values are sent to or received from other ranks.

Non-parallel: sendData is simply copied to recvData.

Note: the message tag should be chosen to be a unique value, since the implementation uses probing with ANY_SOURCE.

An initial barrier may help to avoid synchronisation problems caused elsewhere (see the "nbx.tuning" optimisation switch).

| [in] | sendData | The non-zero value at [proci] is sent to proci |

| [out] | recvData | The non-zero value received from each rank |

| tag | Message tag for the communication |

Definition at line 75 of file UPstreamAllToAll.C.

static

Exchange int64_t data between ranks [NBX].

The recvData map is always cleared initially, so a simple check of its keys is sufficient to determine connectivity.

Non-parallel: only the entry for the own rank (if it exists) is copied.

See the notes about message tags and the "nbx.tuning" optimisation switch.

| [in] | sendData | The value at [proci] is sent to proci. |

| [out] | recvData | The values received from given ranks. |

| tag | Message tag for the communication |

Definition at line 75 of file UPstreamAllToAll.C.

inline static

Exchange int64_t data between ranks [NBX].

| [in] | sendData | The value at [proci] is sent to proci. |

| tag | Message tag for the communication |

Definition at line 1363 of file UPstream.H.

static

Receive identically-sized char data from all ranks.

| sendData | On rank: individual value to send |

| recvData | On master: receive buffer with all values |

| count | Number of send/recv data per rank. Globally consistent! |

Definition at line 93 of file UPstreamGatherScatter.C.

Referenced by fileOperation::getGlobalHostIORanks(), Foam::getHostGroupIds(), and profilingPstream::report().
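With identically-sized contributions, the master's receive buffer is simply nProcs consecutive blocks of count items, one per rank in rank order. The single-process model below (hypothetical gatherFixedModel, no MPI) makes that layout explicit.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Single-process model of gathering identically-sized blocks (no MPI):
// the block of rank proci lands at offset proci*count on the master
inline std::vector<char> gatherFixedModel(
    const std::vector<std::vector<char>>& perRank, std::size_t count)
{
    std::vector<char> recv(perRank.size() * count);
    for (std::size_t proci = 0; proci < perRank.size(); ++proci)
    {
        assert(perRank[proci].size() == count);  // count must be consistent
        for (std::size_t i = 0; i < count; ++i)
        {
            recv[proci * count + i] = perRank[proci][i];
        }
    }
    return recv;
}
```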

static

Send identically-sized char data to all ranks.

| sendData | On master: send buffer with all values |

| recvData | On rank: individual value to receive |

| count | Number of send/recv data per rank. Globally consistent! |

Definition at line 93 of file UPstreamGatherScatter.C.
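The fixed-size scatter uses the inverse layout: rank proci receives the block that starts at proci*count in the master's send buffer. The hypothetical scatterFixedModel below returns the block a given rank would receive (single process, no MPI).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Single-process model of scattering identically-sized blocks (no MPI):
// rank proci receives items [proci*count, (proci+1)*count) of the
// master's send buffer
inline std::vector<char> scatterFixedModel(
    const std::vector<char>& masterSend, std::size_t count, std::size_t proci)
{
    assert((proci + 1) * count <= masterSend.size());
    const auto first =
        masterSend.begin() + static_cast<std::ptrdiff_t>(proci * count);
    return std::vector<char>(first, first + static_cast<std::ptrdiff_t>(count));
}
```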

static

Gather/scatter identically-sized char data.

Each rank sends the data in its own (proc) slot and receives into all slots.

| allData | On all ranks: the base of the data locations |

| count | Number of send/recv data per rank. Globally consistent! |

Definition at line 93 of file UPstreamGatherScatter.C.
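In the in-place variant, each rank starts with a full-sized buffer in which only its own slot is filled, and ends with every slot filled. The single-process model below (hypothetical allGatherInPlaceModel, no MPI) keeps one such buffer per rank.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Single-process model of an in-place all-gather (no MPI):
// buffers[r] is rank r's buffer of nProcs*count items; only slot r is
// valid on entry, and every slot is valid on every rank on exit
inline void allGatherInPlaceModel(std::vector<std::vector<char>>& buffers,
                                  std::size_t count)
{
    const std::size_t n = buffers.size();
    for (std::size_t proci = 0; proci < n; ++proci)
    {
        for (std::size_t dst = 0; dst < n; ++dst)
        {
            for (std::size_t i = 0; i < count; ++i)
            {
                // copy slot 'proci' from its owner into rank 'dst'
                buffers[dst][proci * count + i] = buffers[proci][proci * count + i];
            }
        }
    }
}
```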

static

Receive variable-length char data from all ranks.

| sendCount | Ignored on master if recvCounts[0] == 0 |

| recvData | Ignored on non-root rank |

| recvCounts | Ignored on non-root rank |

| recvOffsets | Ignored on non-root rank |

Definition at line 93 of file UPstreamGatherScatter.C.

Referenced by decomposedBlockData::gather(), and decomposedBlockData::gatherSlaveData().
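For variable-length data the master needs per-rank counts and offsets; the offsets are the exclusive prefix sum of the counts. The sketch below (hypothetical helpers countsToOffsets and gatherVarModel, single process, no MPI) derives the offsets and places each rank's bytes, including a rank that contributes nothing.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Exclusive prefix sum of the per-rank counts, with one trailing
// entry holding the total size
inline std::vector<int> countsToOffsets(const std::vector<int>& counts)
{
    std::vector<int> offsets(counts.size() + 1, 0);
    for (std::size_t i = 0; i < counts.size(); ++i)
    {
        offsets[i + 1] = offsets[i] + counts[i];
    }
    return offsets;
}

// Single-process model of a variable-length gather onto the master:
// rank proci's data is copied to recv starting at offsets[proci]
inline std::vector<char> gatherVarModel(const std::vector<std::vector<char>>& perRank)
{
    std::vector<int> counts;
    for (const auto& d : perRank)
    {
        counts.push_back(static_cast<int>(d.size()));
    }
    const std::vector<int> offsets = countsToOffsets(counts);

    std::vector<char> recv(static_cast<std::size_t>(offsets.back()));
    for (std::size_t proci = 0; proci < perRank.size(); ++proci)
    {
        for (int i = 0; i < counts[proci]; ++i)
        {
            recv[offsets[proci] + i] = perRank[proci][i];
        }
    }
    return recv;
}
```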

static

Send variable-length char data to all ranks.

| sendData | Ignored on non-root rank |

| sendCounts | Ignored on non-root rank |

| sendOffsets | Ignored on non-root rank |

Definition at line 93 of file UPstreamGatherScatter.C.
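On the master, sendCounts and sendOffsets describe which slice of sendData each rank receives; the other ranks ignore these arguments. The hypothetical scatterVarModel below extracts the slice for one rank under that layout (single process, no MPI).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Single-process model of a variable-length scatter from the master:
// rank proci receives sendCounts[proci] items starting at
// sendOffsets[proci] of the master's buffer
inline std::vector<char> scatterVarModel(const std::vector<char>& sendData,
                                         const std::vector<int>& sendCounts,
                                         const std::vector<int>& sendOffsets,
                                         std::size_t proci)
{
    const int offset = sendOffsets.at(proci);
    const int count = sendCounts.at(proci);
    assert(offset >= 0 && count >= 0 &&
           static_cast<std::size_t>(offset + count) <= sendData.size());
    return std::vector<char>(sendData.begin() + offset,
                             sendData.begin() + offset + count);
}
```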

static

Receive identically-sized int32_t data from all ranks.

| sendData | On rank: individual value to send |

| recvData | On master: receive buffer with all values |

| count | Number of send/recv data per rank. Globally consistent! |

Definition at line 94 of file UPstreamGatherScatter.C.

static

Send identically-sized int32_t data to all ranks.

| sendData | On master: send buffer with all values |

| recvData | On rank: individual value to receive |

| count | Number of send/recv data per rank. Globally consistent! |

Definition at line 94 of file UPstreamGatherScatter.C.

static

Gather/scatter identically-sized int32_t data.

Each rank sends the data in its own (proc) slot and receives into all slots.

| allData | On all ranks: the base of the data locations |

| count | Number of send/recv data per rank. Globally consistent! |